There is genuinely no end to human depravity. And tech has always been an enabler of that depravity, if not a catalyst for it, as is evident in the comment section of any social media platform. The latest addition to this nightmare cesspool is the surge in popularity of 'nudify' apps that use AI to virtually undress individuals in photos. Unsurprisingly, this has raised significant alarm among law enforcement and online watchdog groups. However, it seems we are only at the beginning of this troubling trend: the spread of explicit computer-generated images, particularly those involving minors, remains an unresolved and concerning issue online. No one, least of all the police, has any idea how to stop it, since no regulation specifically addresses it beyond dated privacy and explicit-content laws.

AI - A Cosmic Wrench in the Hands of Primitive Monkeys

From its very birth, the internet has been a vehicle for non-consensual pornography, which was common on Web 1.0 adult sites and typically targeted public figures. Privacy experts have long expressed concern over how easily and effectively the web can disseminate these creations to millions at unprecedented speed.

These recent 'nudify' apps exemplify a distressing trend in the development of non-consensual NSFW content, enabled by advances in artificial intelligence. Termed 'deepfakes', such fabricated media present serious legal and ethical challenges. The source images are often taken from social media platforms, and the results are distributed without the consent, control, or even the knowledge of the individuals depicted.

Recent research by Graphika has revealed a staggering user base, with over 24 million individuals visiting AI-powered nudity websites in September 2023 alone. These platforms employ deep-learning algorithms to digitally manipulate images, predominantly of women and underage users, portraying them as unclothed, regardless of the original context of the photo. The proliferation of spam advertisements across major platforms has contributed to a drastic surge, with site and app visits increasing by more than 2,000 percent since the start of 2023.

This worrying trend is visible across major platforms such as Google's YouTube, Reddit, and X. In addition, 52 Telegram groups were found to be facilitating access to non-consensual intimate imagery (NCII) services.

The rise in the use of such apps coincides with the release of numerous open-source diffusion models, a class of AI capable of generating images far superior in quality to those created just a few years ago. Because these open-source models are available to app developers free of cost, they have contributed significantly to the proliferation of such tools.

Virtual Crimes with Real Victims

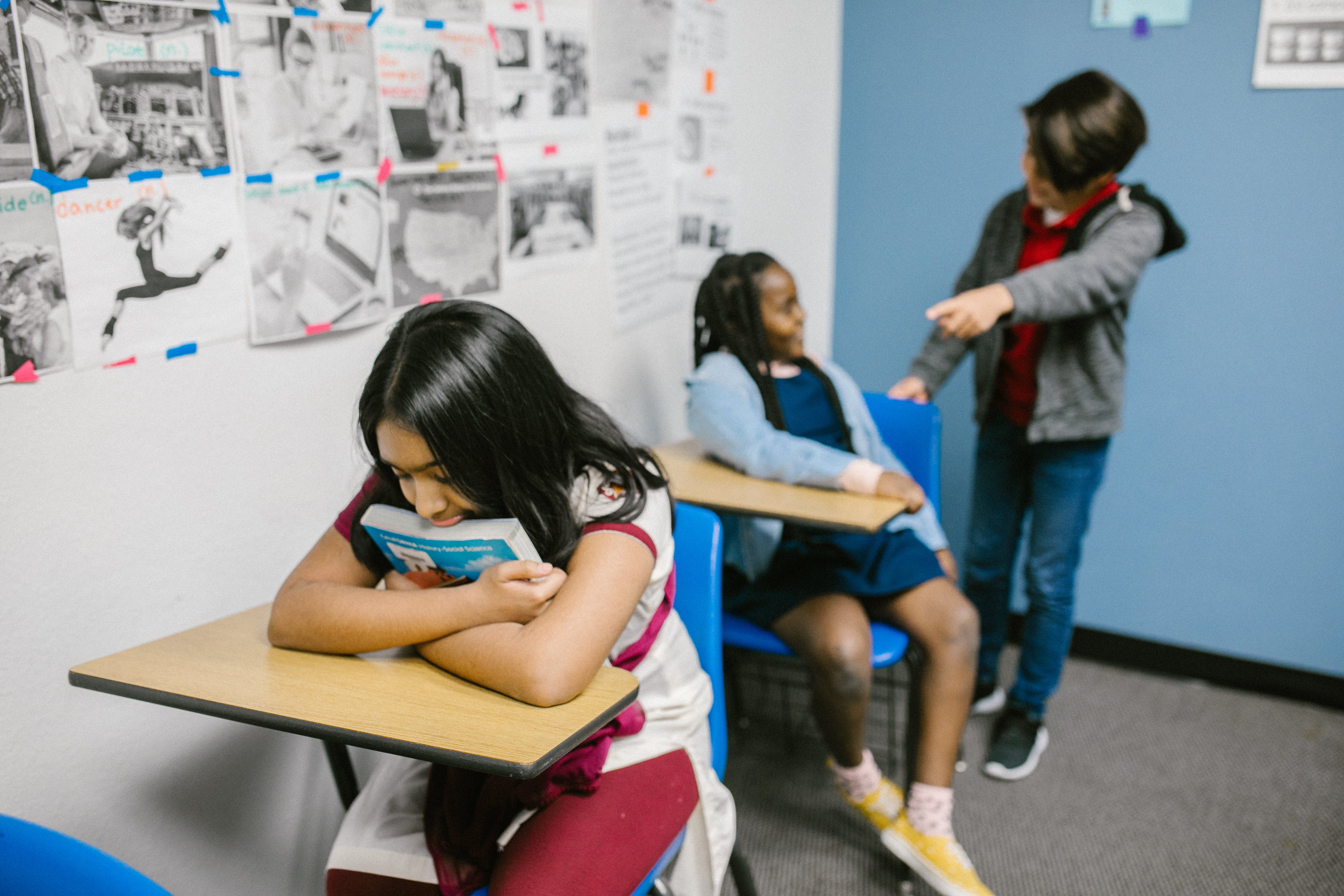

Even though this seems like an online problem, trends of this kind leave behind real-life victims. Sometimes it's a prank taken to a criminal degree; other times it's a well-thought-out harassment tactic, used to shame, bully or blackmail the victims.

To cite an example, at the start of the school year in Almendralejo, Spain, teenage girls began reporting unsettling encounters with peers who claimed to have seen nude photos of them. Concerned parents quickly convened in a WhatsApp group and discovered that about 20 girls had had similar experiences. Law enforcement stepped in, opening an investigation and identifying suspects believed to have distributed the content. Remarkably, these suspects were likely fellow teenagers, so the case was handed to a Juvenile Prosecutor's Office. The episode damaged not only the lives of those who created such abominable content, but also the lives of those who were targeted and had no idea about it until they were confronted with it.

The disturbing trend of 'revenge porn', the dissemination of explicit content acquired through hacking or shared in confidence and later leaked without consent, is sadly not new among adolescents. However, this particular incident bore an unsettling twist: though they appeared authentic, the images were in fact the product of a generative AI program.

Tragically, this occurrence is not isolated. In the United States, educational institutions have grappled with incidents where students employed AI-generated nude imagery as a means of bullying and harassment. A distressing case arose in Muskego, Wisconsin, where the local police discovered that several middle school girls, believing they were communicating with a 15-year-old boy on Snapchat, ended up sending images of themselves. Shockingly, this individual turned out to be a 33-year-old man who coerced them into sending explicit photos, threatening to manipulate their original images with AI technology to fabricate sexual content and circulate it among their acquaintances. Earlier in the year, the FBI issued a warning regarding an increase in the use of AI-generated deepfakes for "sextortion" schemes, noting reports from victims, including minors.

Silicon Valley and the Perpetual Game of Shifting Blame

Silicon Valley finds itself entangled in a perpetual game of blame-shifting, a saga in which accountability keeps changing hands with each contentious issue.

X (previously Twitter), a towering presence in Silicon Valley, has spent the past year embroiled in the problem of combatting CSAM (Child Sexual Abuse Material). After taking over the company, Musk declared the removal of exploitative content involving children to be "priority #1." This pivot diverged from his earlier fixation on bots, yet it resonated strongly with factions on the right, even though the suggestion that such content had already been eradicated was misleading. Musk opportunistically claimed credit for actions not taken, and even erroneously accused Twitter's previous leadership of neglecting the issue for an extended period. Ironically, while championing this cause, Musk was dismantling the existing child safety team upon his arrival.

Unsurprisingly, CSAM has continued to plague X throughout 2023, as reported by The New York Times. Content that authorities consider the "easiest to detect and eliminate" continues to circulate widely, sometimes even receiving algorithmic boosts. Additionally, the company curtailed its spending on certain detection software that had previously aided moderation efforts.

The year 2023 witnessed a staggering surge of over 2,400% in links promoting undressing apps across social media platforms, including X and Reddit. These services leverage AI to digitally undress individuals, predominantly targeting women.

Addressing these concerns, a Google spokesperson reiterated the company's policy against sexually explicit ad content and said it is working to remove ads that violate it. However, YouTube tutorials demonstrating how 'nudify' apps work, or endorsing specific applications, still turn up on the platform in a cursory search for 'AI nudity app.'

Reddit, in response to research findings, underscored its prohibition of non-consensual dissemination of fabricated sexually explicit material, leading to the ban of several domains. Conversely, X remained silent on requests for commentary.

TikTok, recognising the association between the term "undress" and objectionable content, took a proactive stance by blocking the keyword. This action serves as a cautionary message to users that such searches may breach the app's guidelines. Similarly, Meta Platforms Inc. commenced blocking keywords linked to the quest for undressing apps, refraining from further elaboration when queried by reporters.

Is There Really a Solution to this Deluge?

Women and children are already the most harassed, manipulated and victimised groups in real life. Social media and tech enable that very abuse to carry over online, only in a much worse, more anonymous and accelerated manner. For them, it is an everyday thing, and nothing could be a sadder testimony to the state of our society in general.

The distressing surge of AI 'nudify' apps serves as a poignant reminder of the intersection between virtual and real-life harassment. The struggle against privacy infringement, exploitation, and coercion in the digital realm reflects the uphill battle individuals face in navigating their daily lives. Urgent and collective action from legislators, tech companies, and society at large will happen when it does.

Till then, the responsibility to curb these threats and foster a safer, more respectful digital environment for everyone rests on all of our shoulders. We need to remember that the victims are innocent, and they should be treated as such: with love, respect and empathy.

Ⓒ Copyright 2023. All Rights Reserved Powered by Vygr Media.