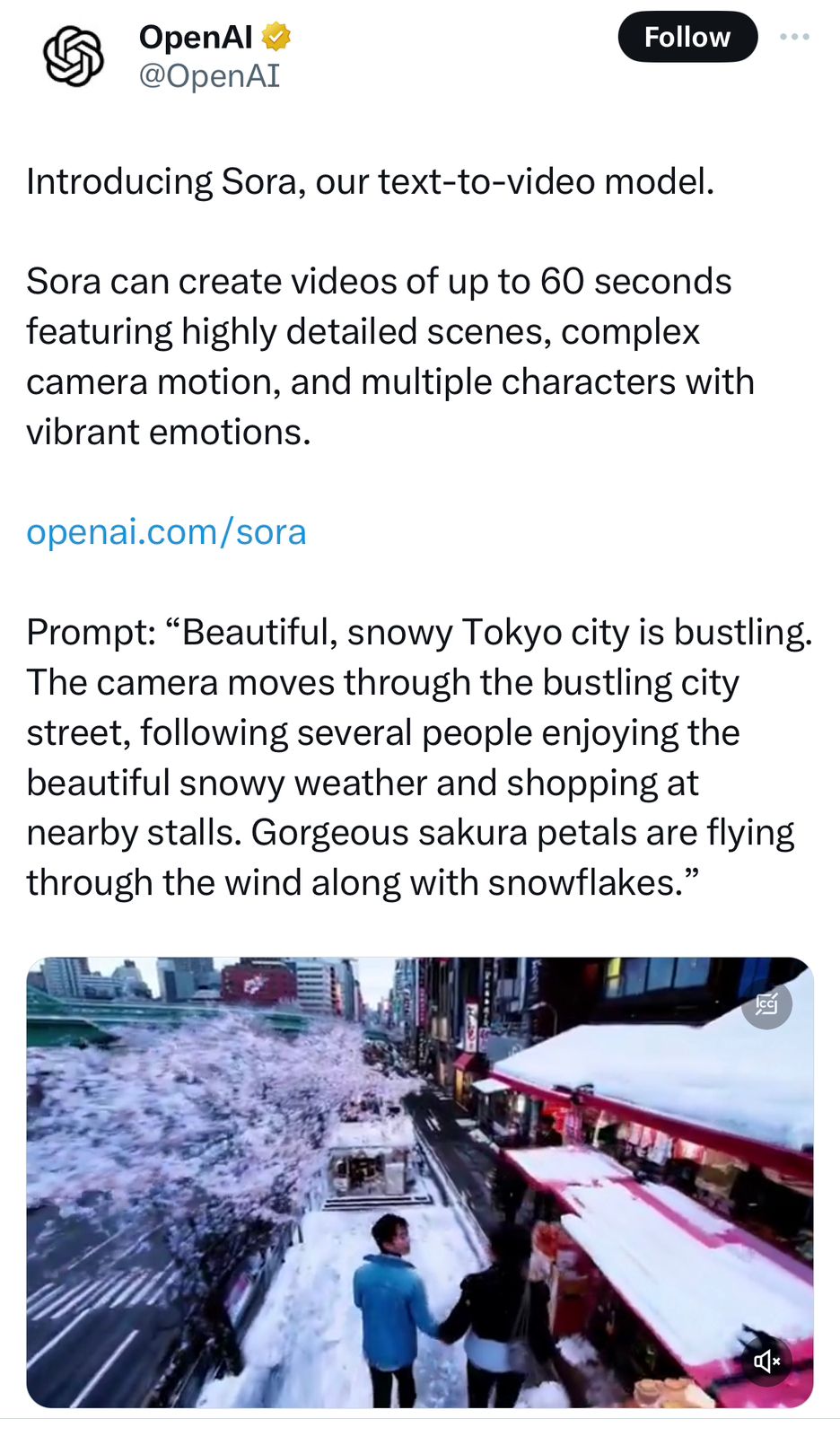

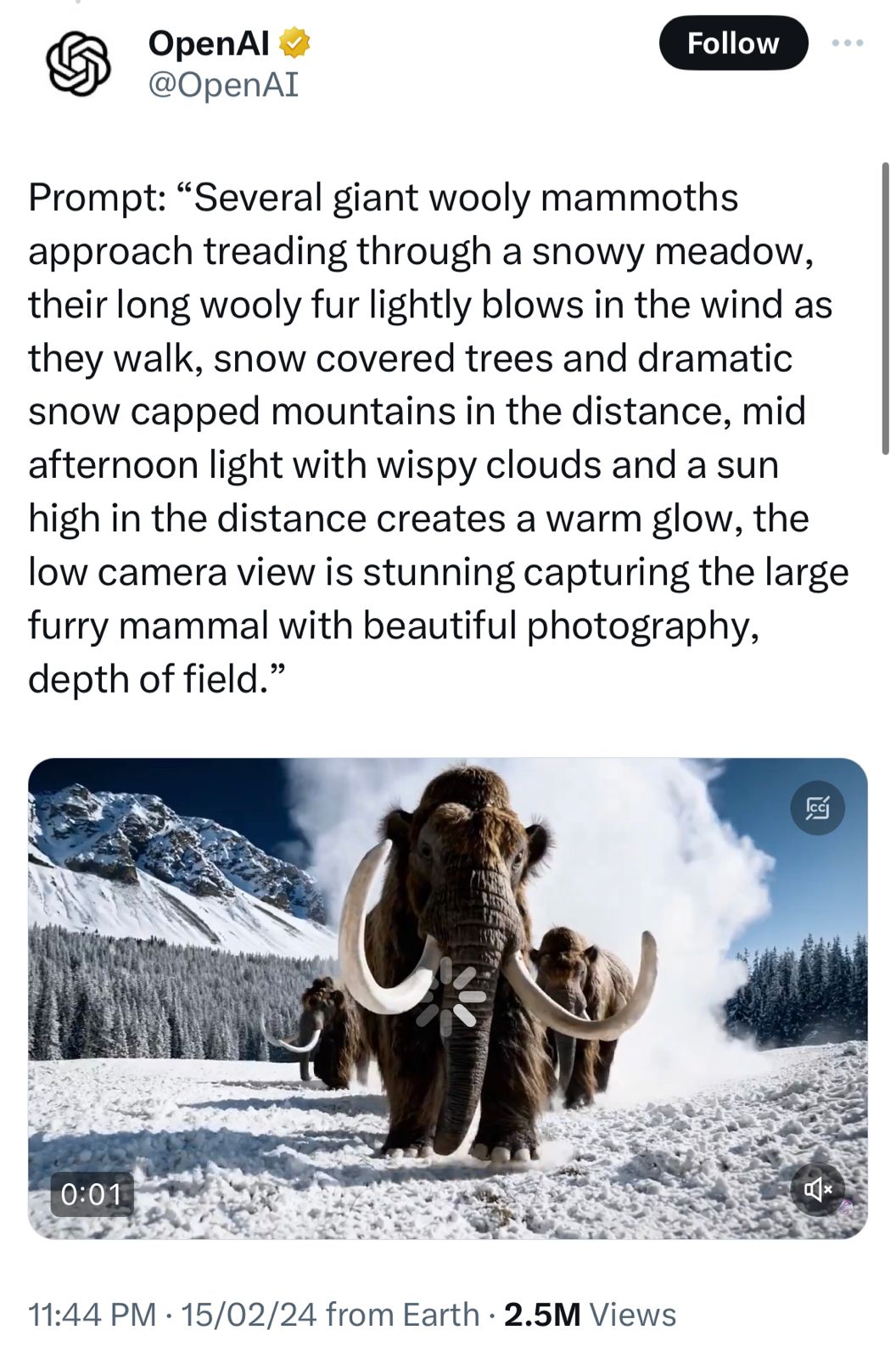

On Thursday, OpenAI, the creator of ChatGPT, launched Sora, an Artificial Intelligence model capable of transforming text prompts into realistic videos. ‘Sora’ in Japanese means sky.

According to OpenAI, Sora can produce realistic videos up to a minute, depicting complex scenes with multiple characters, specific types of motion, and accurate details of the subject and background after taking instructions from a user on the style and subject of the clip.

The company also claimed that the model can understand how objects “exist in the physical world”.

The model's deep understanding of language allows it to“accurately interpret props and generate compelling characters that express vibrant emotions”. Additionally, Sora can animate still images within a single generated video and extend existing videos or fill in missing frames.

OpenAI said, “We’re teaching AI to understand and simulate the physical world in motion, with the goal of training models that help people solve problems that require real-world interaction,”

Sora is currently undergoing development, and prior to its public release, OpenAI has provided access to researchers, visual artists, designers, and filmmakers. This access aims to evaluate potential risks and harms and “to gain feedback on how to advance the model to be most helpful for creative professionals.”

OpenAI stated, "We're releasing our research progress early to collaborate with and receive feedback from individuals outside of OpenAI, providing the public with insight into upcoming AI capabilities."

CEO Sam Altman on X, announced, "Today, we are initiating red-teaming and granting access to a limited number of creators.”

OpenAI stated, "We are collaborating with red teamers, experts in domains such as misinformation, hateful content, and bias, who will conduct adversarial testing of the model."

"We're developing tools to identify misleading content, including a detection classifier capable of recognizing videos generated by Sora. Our text classifier will be integrated into OpenAI products to assess and reject text input prompts that violate our usage policies, such as those soliciting extreme violence, sexual content, hateful imagery, celebrity likeness, or intellectual property of others."

The videos will also feature a watermark indicating they were created by AI. OpenAI plans to incorporate C2PA metadata in the future if the model is deployed in an OpenAI product. The company emphasised its intention to utilize existing safety protocols in products using DALL·E 3, which are applicable to Sora as well.

But OpenAI warned that “Despite extensive research and testing, we cannot predict all of the beneficial ways people will use our technology, nor all the ways people will abuse it.”

However, the current version of Sora has limitations, particularly in accurately simulating complex physics scenes and understanding specific cause-and-effect instances.

According to OpenAI, the model might also have trouble with spatial features, such as not being able to distinguish between left and right, and it might also have trouble accurately describing events that happen over time, like tracking a particular camera trajectory.

Before Sora, with the addition of AI-based capabilities to its image generation model Emu last year, Meta was able to edit and produce movies in response to text prompts. Similarly, Google unveiled Lumiere, a new AI-powered application, which was announced last month, that can create five-second videos on a given prompt, both text- and image-based. Other companies like Runway and Pika have also shown impressive text-to-video models of their own.

©️ Copyright 2024. All Rights Reserved Powered by Vygr Media.