In a major leap forward for AI reasoning and multimodal understanding, OpenAI has unveiled two groundbreaking models — o3 and o4-mini — which mark a significant evolution in the capabilities of ChatGPT. These models introduce built-in agentic abilities, enabling them to autonomously decide when and how to use various tools like web search, Python code execution, image analysis, file interpretation, and image generation — all within a single conversation flow.

The release of these models comes just days after OpenAI launched its GPT-4.1 models in the API, highlighting the company’s fast-paced release schedule. Beyond enhanced reasoning and performance, o3 and o4-mini represent a new step toward OpenAI’s vision of a truly autonomous AI assistant.

What Are Agentic Abilities in o3 and o4-mini?

OpenAI describes the new "agentic" capabilities as the models' native capacity to reason through multi-step problems by not only understanding language but also deciding which tools to use and when to use them. For instance:

- If a task requires a live web search, the models can initiate that themselves.
- If a file or image needs to be interpreted, the models can choose to use the appropriate tools.
- They can chain these tools together within a session for complex, layered tasks.

This enables a more human-like workflow, where the models autonomously combine tools to produce structured, high-quality answers.

o3 Model: OpenAI’s Most Advanced Reasoning System Yet

Described by OpenAI as its "smartest and most capable model yet", the o3 model excels across a wide range of domains:

- Coding and software development
- Mathematics and logic
- Scientific problem-solving
- Visual understanding, including analysis of images, diagrams, and charts

According to OpenAI, the o3 model is ideal for tackling multi-layered, non-obvious problems, and is now the company’s flagship reasoning model in the ChatGPT suite.

Key Features of o3:

- Superior performance in complex, multi-step tasks
- Deep understanding across text and visuals
- Integrates seamlessly with tools like web browsing and code execution
- Can interpret and manipulate uploaded images as part of its thought process

“Thinking with Images” has been one of our core bets in Perception since the earliest o-series launch. We quietly shipped o1 vision as a glimpse—and now o3 and o4-mini bring it to life with real polish. Huge shoutout to our amazing team members, especially:

- @mckbrando, for… https://t.co/WzqoOl8EwW — Jiahui Yu (@jhyuxm) April 16, 2025

o4-mini: High-Speed, Cost-Efficient Reasoning

While o3 is focused on raw capability, the o4-mini is engineered for efficiency and accessibility. Despite its smaller size, o4-mini performs impressively in core areas such as:

- Math and logic
- Coding and data science
- Visual reasoning

It also offers a significantly higher usage limit than o3, making it ideal for high-throughput environments and frequent usage scenarios.

Advantages of o4-mini:

- Optimized for speed and cost
- Delivers solid performance in demanding use cases
- A great option for scaling AI access across teams
- Outperforms the older o3-mini model in several benchmarks

o3 and o4-mini are super good at coding, so we are releasing a new product, Codex CLI, to make them easier to use.

this is a coding agent that runs on your computer. it is fully open source and available today; we expect it to rapidly improve. — Sam Altman (@sama) April 16, 2025

Visual Thinking: AI That Doesn’t Just See—It Thinks

A major innovation in both o3 and o4-mini is their ability to include images directly in their chain of thought.

“They don’t just see an image—they think with it,” OpenAI stated.

This means that users can now upload:

- Photos of whiteboards
- Diagrams from textbooks
- Hand-drawn sketches

The models can work with images even when they are low-resolution, blurry, or reversed. They can also use built-in tools to manipulate images on the fly, for example rotating or zooming into them, as part of their reasoning workflow.

Codex CLI: A New Coding Agent for Developers

Recognizing the enhanced coding abilities of the new models, OpenAI also launched Codex CLI, a standalone, open-source coding agent that runs locally from a user’s terminal. It is designed to work with o3 and o4-mini, letting developers put the models to work directly on code on their own machines.

Announcing the tool on X, OpenAI CEO Sam Altman wrote that it “is fully open source and available today; we expect it to rapidly improve.”

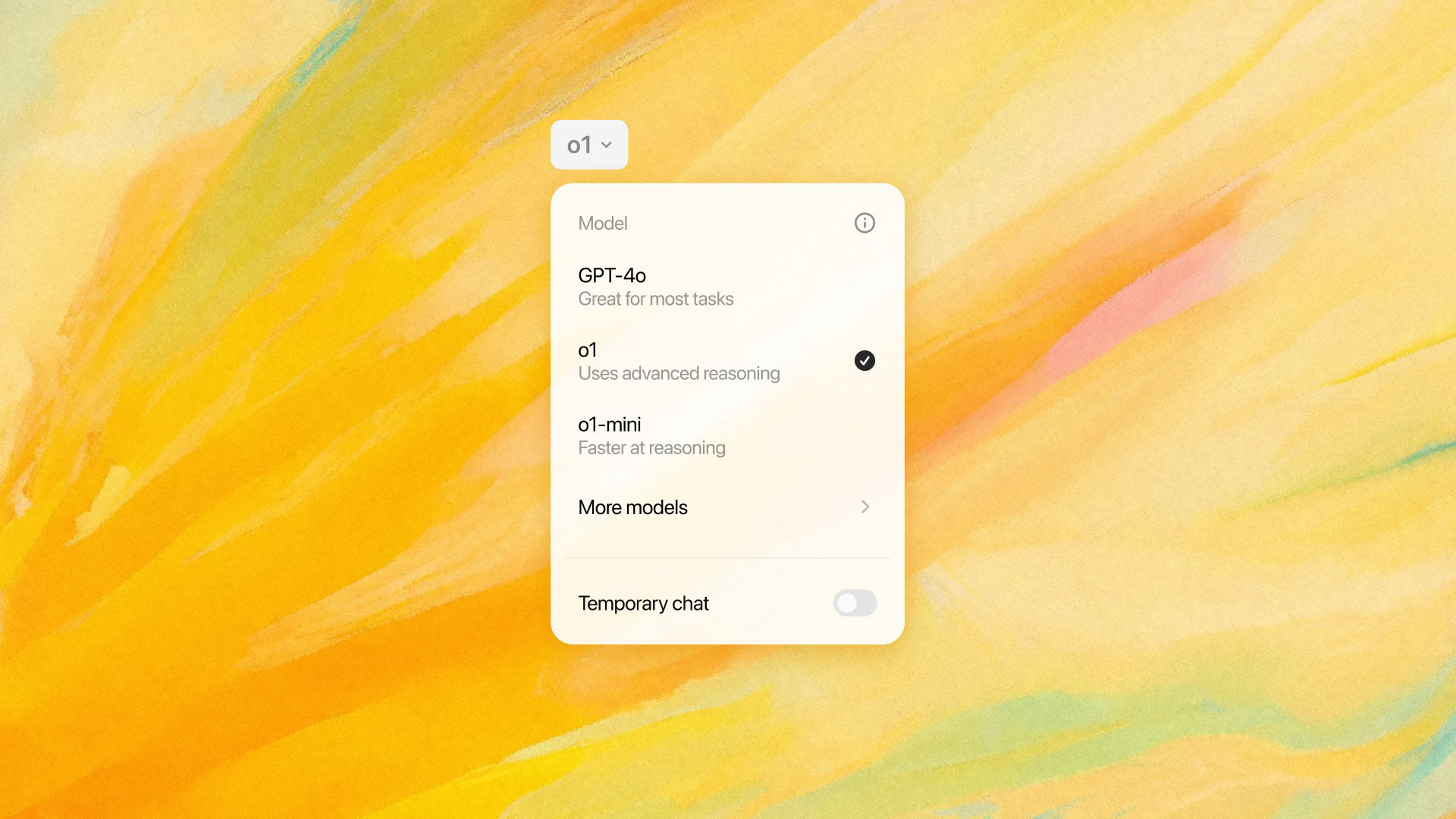

Model Access and Availability

OpenAI has rolled out access to o3 and o4-mini across multiple user tiers:

For ChatGPT Users:

- Plus, Pro, and Team users can now access:
  - o3
  - o4-mini
  - o4-mini-high
- Free-tier users can try o4-mini by selecting “Think” in the ChatGPT composer

For Enterprise and Edu:

- ChatGPT Enterprise and Edu customers will receive access within a week

For Developers:

- o3 and o4-mini are now available via:
  - Chat Completions API
  - Responses API
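For developers, a request to either model through the two APIs could look roughly like the sketch below. It assumes the official `openai` Python SDK (v1.x) and an `OPENAI_API_KEY` environment variable; the helper names (`build_responses_request`, `build_chat_request`, `ask_via_responses`, `ask_via_chat`) are hypothetical, chosen for illustration. The request builders are kept separate from the network calls so the payload shape can be inspected without an API key.

```python
"""Minimal sketch: calling o3 / o4-mini through OpenAI's two developer APIs.

Assumes the official `openai` Python SDK (v1.x) and an OPENAI_API_KEY
environment variable. All helper names below are hypothetical.
"""

DEFAULT_MODEL = "o4-mini"  # swap in "o3" for the larger reasoning model


def build_responses_request(prompt: str, model: str = DEFAULT_MODEL) -> dict:
    """Keyword arguments for a Responses API call (client.responses.create)."""
    return {"model": model, "input": prompt}


def build_chat_request(prompt: str, model: str = DEFAULT_MODEL) -> dict:
    """Keyword arguments for a Chat Completions call (client.chat.completions.create)."""
    return {"model": model, "messages": [{"role": "user", "content": prompt}]}


def ask_via_responses(prompt: str, model: str = DEFAULT_MODEL) -> str:
    # Imported lazily so the builders above work without the SDK installed.
    from openai import OpenAI

    client = OpenAI()  # reads OPENAI_API_KEY from the environment
    response = client.responses.create(**build_responses_request(prompt, model))
    return response.output_text  # convenience property joining the text output


def ask_via_chat(prompt: str, model: str = DEFAULT_MODEL) -> str:
    from openai import OpenAI

    client = OpenAI()
    completion = client.chat.completions.create(**build_chat_request(prompt, model))
    return completion.choices[0].message.content


if __name__ == "__main__":
    # Only prints the request shapes; no network call is made here.
    print(build_responses_request("Explain this chart in two sentences."))
    print(build_chat_request("Explain this chart in two sentences.", model="o3"))
```

With a key configured, calling `ask_via_responses("...")` or `ask_via_chat("...", model="o3")` would send a real, billable request. The Responses API takes a plain `input`, while Chat Completions keeps the familiar list of role-tagged `messages`.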

Naming Confusion and What’s Next

OpenAI’s evolving model names have created some confusion among users, a point CEO Sam Altman humorously acknowledged on X:

“How about we fix our model naming by this summer, and everyone gets a few more months to make fun of us (which we very much deserve) until then?”

Naming aside, OpenAI confirmed that a more capable o3-pro model is in development and is expected to reach Pro users in the coming weeks.

In an age where content is consumed across multiple platforms and on the go, the demand for quick, reliable, and accessible video downloaders has grown tremendously. Among the many options available, Y2Mate is a popular, free online tool that lets users download YouTube videos in various formats, particularly MP4 (video) and MP3 (audio). Whether you’re a student saving educational videos for offline viewing or a music lover collecting your favourite tracks, Y2Mate offers a simple and efficient solution.

How Y2Mate Works

Using Y2Mate is refreshingly straightforward. You don’t need to install any software or create an account. Here’s how it works:

- Copy the YouTube Video URL: Navigate to the YouTube video you want to download and copy the link from your browser's address bar.
- Paste in Y2Mate: Go to the Y2Mate website and paste the copied link into the provided search bar.
- Select Format and Quality: Once the video is processed, you’ll see a list of format options such as MP4 and MP3. For video, you can select from different quality levels like 360p, 720p, or 1080p, depending on the original video’s resolution.
- Download: Click the download button next to your preferred format and quality. The file is then prepared and available for direct download to your device.

Key Features of Y2Mate

- Multi-Format Support: Y2Mate supports a wide range of formats, with MP3 for audio and MP4 for video being the most common. This allows for flexibility depending on what you want to do with the file: listen or watch.
- High-Resolution Downloads: Users can download videos in HD quality (up to 1080p) when available, ensuring that playback remains sharp and enjoyable.
- No Registration Required: One of the biggest advantages is that Y2Mate doesn’t require users to sign up, making the process fast and hassle-free.
- Browser-Based Interface: Since it’s an online tool, there’s no need for installations or plugins, reducing the risk of malware or bloatware on your device.
- Compatibility: Y2Mate works across all major browsers including Chrome, Firefox, Safari, and Edge, and is compatible with both desktop and mobile devices.
- Fast Conversion Speeds: Depending on your internet connection, Y2Mate processes and converts files relatively quickly, with minimal waiting time.

A disclaimer from us: while Y2Mate is a convenient tool, users should be cautious about occasional pop-up ads and potential redirects. It’s also important to ensure that downloading videos complies with copyright laws and YouTube’s terms of service; downloading copyrighted content without permission may be illegal in your jurisdiction.

Overall, Y2Mate is an efficient and accessible tool for downloading YouTube content in MP4 and MP3 formats. Its intuitive interface, fast download speeds, and broad compatibility make it a top choice among free video downloaders. While users should be mindful of legal considerations, for personal use and offline access, Y2Mate remains a reliable go-to platform.

A Major Leap Toward Autonomous AI Agents

The launch of o3 and o4-mini marks a turning point in OpenAI’s roadmap—transforming ChatGPT from a static language model into a more dynamic, tool-using AI agent capable of multimodal reasoning, coding, and autonomous problem-solving.

With Codex CLI and improved accessibility across subscription tiers, OpenAI is not only pushing the boundaries of what AI can do — it’s also democratizing access to intelligent agents that can collaborate with humans more fluidly than ever before.

As OpenAI continues to iterate on these agentic models, the line between simple chatbot and full-fledged AI assistant continues to blur — and the future of work, creativity, and learning may never be the same.

With inputs from agencies. This article also mentions sponsored content, which the journalist as well as the organisation are not responsible for. User discretion is advised.

Image Source: Multiple agencies

© Copyright 2025. All Rights Reserved Powered by Vygr Media.