Artificial Intelligence (AI) has ushered in a new era of innovation and efficiency. From streamlining work tasks and enhancing research to powering life-like conversations with chatbots, the AI revolution is well underway. However, beneath the promises of productivity lies a growing environmental dilemma. The energy-guzzling engines that drive modern AI—particularly generative AI like ChatGPT, DALL-E, and Gemini—are exacting a significant toll on our planet.

As AI continues its meteoric rise, questions mount: Can we reconcile AI’s transformative potential with the urgent need for environmental sustainability? Or are we trading one crisis for another?


The Environmental Toll of Generative AI

1. Massive Energy Consumption: The Core of the Problem

Training large language models (LLMs) like GPT-3 or GPT-4 requires massive computational power and, with it, an equally massive amount of electricity. These models contain billions of parameters, and the electricity required to train one can power more than a hundred U.S. homes for a year.

A 2021 study estimated that training GPT-3 used around 1,287 megawatt-hours of electricity, equivalent to the annual consumption of about 120 homes, and released 552 tons of CO₂. Even more startling, a University of Massachusetts study found that training certain large AI models can produce about 626,000 pounds of CO₂, roughly the same as 300 round-trip flights from New York to San Francisco.
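As a rough consistency check on these figures, the arithmetic can be sketched in a few lines of Python. The inputs are the numbers quoted in this article; the variable names are illustrative, and treating the emissions figure as metric tons is an assumption.

```python
# Figures quoted in the article (variable names are illustrative).
TRAINING_ENERGY_MWH = 1_287   # estimated electricity used to train GPT-3
EQUIVALENT_HOMES = 120        # homes powered for one year
TRAINING_CO2_TONS = 552       # estimated CO2 released (assumed metric tons)

# Implied annual electricity use per home, in MWh.
mwh_per_home = TRAINING_ENERGY_MWH / EQUIVALENT_HOMES

# Implied carbon intensity of the electricity used, in kg CO2 per kWh
# (1 metric ton = 1,000 kg and 1 MWh = 1,000 kWh).
kg_co2_per_kwh = (TRAINING_CO2_TONS * 1_000) / (TRAINING_ENERGY_MWH * 1_000)

print(f"Implied use per home: {mwh_per_home:.1f} MWh/year")
print(f"Implied carbon intensity: {kg_co2_per_kwh:.2f} kg CO2/kWh")
```

The implied figure of roughly 10.7 MWh per home per year is in line with typical U.S. household consumption, so the two numbers are consistent with each other.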

But it doesn't stop there. The energy use persists long after training, as millions of people engage with AI daily. A single ChatGPT query is estimated to emit approximately 4.32 grams of CO₂e and to use about five times the energy of a standard Google search. Multiply that by millions of daily interactions, and AI’s carbon footprint becomes staggering.
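To see how the per-query figure scales, here is a minimal Python sketch. The 4.32 g estimate comes from the article; the daily query volumes are hypothetical values chosen only for illustration, and the function name is made up for this example.

```python
GRAMS_CO2E_PER_QUERY = 4.32  # per-query estimate quoted in the article


def daily_emissions_tonnes(queries_per_day: int) -> float:
    """Return daily emissions in metric tonnes of CO2e."""
    grams = queries_per_day * GRAMS_CO2E_PER_QUERY
    return grams / 1_000_000  # grams -> tonnes


# Hypothetical traffic levels, for illustration only.
for queries in (1_000_000, 10_000_000, 100_000_000):
    tonnes = daily_emissions_tonnes(queries)
    print(f"{queries:>11,} queries/day -> {tonnes:,.1f} t CO2e/day")
```

Because the relationship is linear, every tenfold increase in traffic means a tenfold increase in emissions unless the per-query cost falls.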

2. The Rise of Energy-Hungry Data Centers

The infrastructure powering AI—data centers—is growing at an alarming pace. These temperature-controlled facilities house tens of thousands of servers, each drawing energy 24/7.

- Between 2019 and 2023, power consumption by data centers rose by 72%, driven heavily by generative AI workloads.
- In 2022, global data centers consumed around 460 terawatt-hours of electricity. If data centers were a country, that would make them the 11th largest electricity consumer in the world, just behind France.
- By 2026, that figure is projected to exceed 1,000 terawatt-hours, potentially making data centers the 5th largest electricity consumer globally, between Japan and Russia.
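The two consumption figures imply a steep annual growth rate. The sketch below works it out in Python, using only the 2022 and 2026 numbers quoted here and assuming steady compound growth between them, which is a simplification.

```python
CONSUMPTION_2022_TWH = 460    # figure quoted in the article
PROJECTION_2026_TWH = 1_000   # projected level quoted in the article
YEARS = 2026 - 2022

# Overall growth factor between the two years.
growth_factor = PROJECTION_2026_TWH / CONSUMPTION_2022_TWH

# Compound annual growth rate, assuming steady growth each year.
implied_annual_growth = growth_factor ** (1 / YEARS) - 1

print(f"Total growth: {growth_factor:.2f}x over {YEARS} years")
print(f"Implied compound annual growth: {implied_annual_growth:.1%}")
```

In other words, reaching the projected level would require data center electricity use to grow by a little over a fifth every year.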

3. Water Usage: The Often-Overlooked Cost

Energy isn’t the only resource AI demands—water use is soaring too. Cooling data centers requires chilled water systems that absorb and remove the heat generated by constant computing.

- It is estimated that for every kilowatt-hour of electricity consumed by a data center, two liters of water are needed for cooling.
- Running GPT-3, for example, is estimated to use roughly one 16-ounce bottle of water for every 10–50 queries: a small amount per user, but astronomical in aggregate.

By 2027, AI’s annual water withdrawal could reach as much as 6.6 billion cubic meters, raising concerns over strain on local water supplies and biodiversity.
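The water estimates above can be turned into per-query and per-kilowatt-hour numbers with simple arithmetic. This Python sketch uses only the figures quoted in this section; the fluid-ounce conversion assumes U.S. fluid ounces, and the function name is illustrative.

```python
ML_PER_US_FLUID_OUNCE = 29.5735
BOTTLE_ML = 16 * ML_PER_US_FLUID_OUNCE   # one 16-ounce bottle, in mL
MIN_QUERIES, MAX_QUERIES = 10, 50        # queries per bottle, as quoted
LITERS_PER_KWH = 2                       # cooling estimate, as quoted

# Fewer queries per bottle means more water per query.
ml_per_query_max = BOTTLE_ML / MIN_QUERIES
ml_per_query_min = BOTTLE_ML / MAX_QUERIES


def cooling_water_liters(energy_kwh: float) -> float:
    """Estimate cooling water for a given amount of electricity."""
    return energy_kwh * LITERS_PER_KWH


print(f"Water per query: {ml_per_query_min:.1f} to {ml_per_query_max:.1f} mL")
print(f"Cooling water for 1 MWh: {cooling_water_liters(1_000):,.0f} L")
```

A few teaspoons to a few tablespoons per query sounds trivial, which is exactly why the aggregate total is easy to overlook.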

4. E-Waste and Hardware Emissions

AI’s growing influence has fueled demand for high-performance computing hardware like GPUs, which require complex manufacturing processes and rare earth materials.

- The e-waste generated contains hazardous chemicals (e.g., lead, mercury, cadmium) that threaten ecosystems if not properly disposed of.
- In 2023, TechInsights estimated that NVIDIA, AMD, and Intel shipped 3.85 million GPUs to data centers, up from 2.67 million in 2022.
- Manufacturing these chips involves high emissions, toxic processing chemicals, and unsustainable mining practices.

According to the World Economic Forum, global e-waste could exceed 120 million metric tonnes by 2050—a growing concern if AI’s hardware needs continue unchecked.

Balancing Innovation and Sustainability: A Green Dilemma

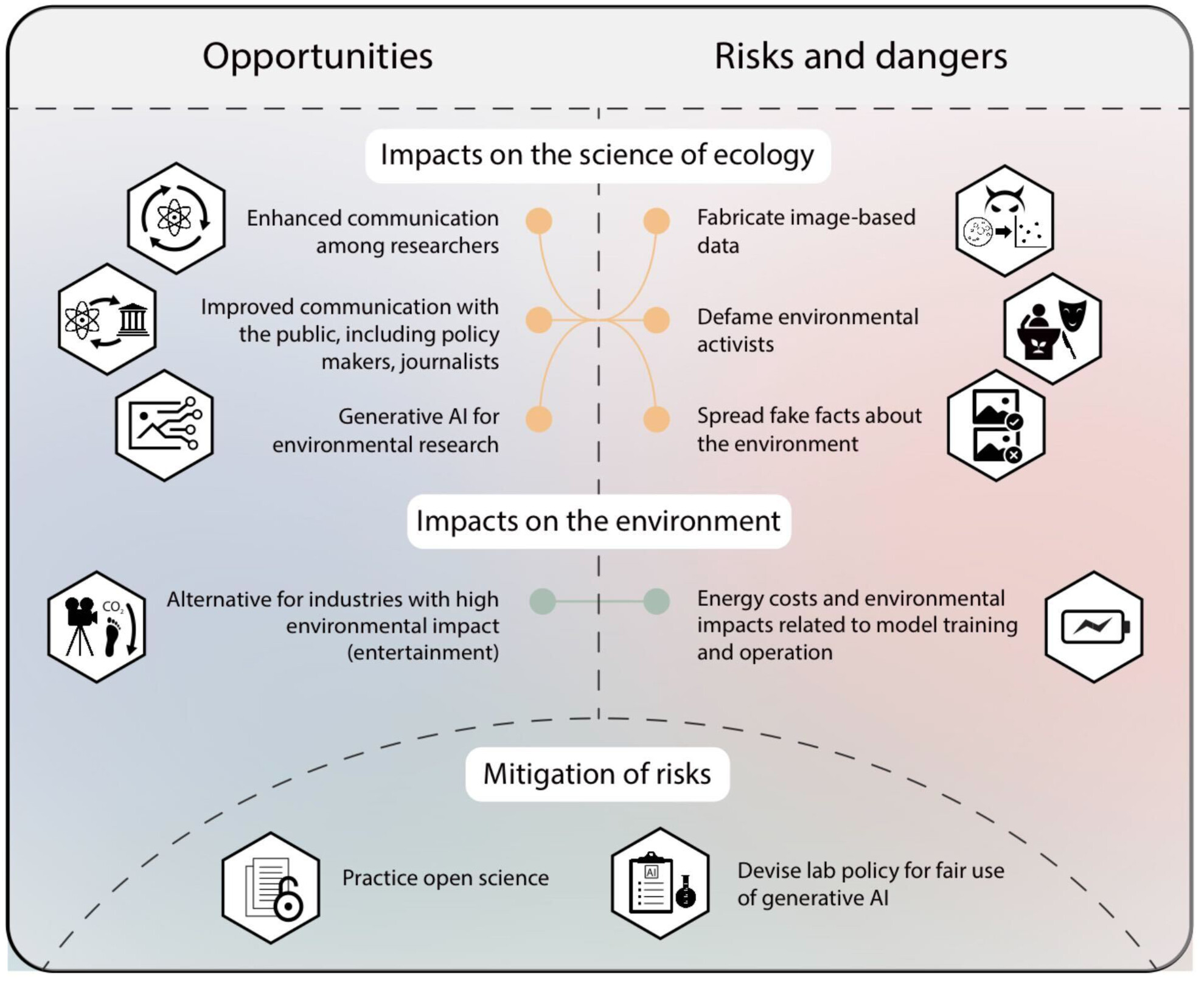

The environmental challenges posed by AI are clear—but so is AI’s potential to help fight climate change. It's a double-edged sword, and the path forward requires nuance, regulation, and innovation.

Where AI Harms the Environment

- Carbon emissions from model training, inference, and data center operations
- High water usage for cooling hardware
- E-waste from rapid hardware upgrades
- Harm to natural ecosystems from AI-driven automation in industries like agriculture and logistics
- Overconsumption of resources due to AI-driven convenience (e.g., same-day deliveries)

Where AI Can Help the Environment

- Predicting extreme weather and climate risks
- Modeling climate scenarios to inform policy and investment
- Optimizing energy grids and resource allocation
- Accelerating sustainable material development
- Reducing industrial carbon footprints through demand forecasting and operations optimization

Ethical Risks and the Need for Transparency

AI systems are often trained on opaque datasets with unknown carbon footprints. The lack of transparency from many tech companies makes it difficult to evaluate the full scope of AI’s environmental cost.

Moreover, if AI systems are optimized to prioritize economic gain over environmental sustainability, they can perpetuate harmful outcomes—including the degradation of ecosystems and unfair resource allocation.

The Path to Sustainable AI: What Needs to Change

1. Optimize Energy Usage

Companies must invest in energy-efficient hardware, such as low-power GPUs, and smarter algorithms that require less compute during training and inference.

Using pre-trained models, batch processing, and model compression can drastically reduce compute demands.
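To make model compression concrete, here is a minimal Python sketch of one common technique, symmetric 8-bit quantization, which stores each weight as a small integer plus a shared scale factor. It is an illustration only: the weights are made-up values, the function names are hypothetical, and production systems rely on dedicated libraries rather than code like this.

```python
def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Map floats to integers in [-127, 127] plus one shared scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    return [round(w / scale) for w in weights], scale


def dequantize(quantized: list[int], scale: float) -> list[float]:
    """Recover approximate float weights from the integers."""
    return [q * scale for q in quantized]


weights = [0.82, -1.27, 0.05, 0.4]  # made-up example values
quantized, scale = quantize_int8(weights)
restored = dequantize(quantized, scale)

print("Quantized:", quantized)
print("Largest rounding error:",
      max(abs(w - r) for w, r in zip(weights, restored)))
```

Each 8-bit integer takes one byte instead of the four bytes of a 32-bit float, so the stored weights shrink to about a quarter of their size at the cost of a small rounding error.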

2. Transition to Renewable Energy

Tech giants are starting to lead:

- Amazon reports that it now matches 100% of the electricity it uses with renewable sources.
- Microsoft and its suppliers are targeting 100% carbon-free electricity by 2030.
- Google Cloud commits to carbon-free data center operations by the end of the decade.
- OpenAI CEO Sam Altman has invested in Exowatt, a solar energy startup that aims to power AI data centers cleanly.

Yet more transparency and broader adoption are urgently needed.

3. Reduce E-Waste and Improve Recycling

- Enforce ethical e-waste disposal standards.
- Strengthen regulations for hardware recycling and material sourcing.
- Promote modular hardware to extend equipment life.

4. Improve Emissions Tracking

Companies must track their emissions accurately and categorize them:

- Scope 1 & 2: Direct emissions from owned infrastructure (Scope 1) and indirect emissions from purchased electricity (Scope 2)
- Scope 3: Indirect emissions from third-party services (e.g., AWS, Azure)

Tools like cloud provider dashboards and carbon calculators, along with standards such as ISO/IEC 42001 (AI management systems) and ISO 14001 (environmental management systems), can support better monitoring.
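A simple way to picture scope-based tracking is a ledger that tags every emission source with its scope and totals each category. The Python sketch below is illustrative only: the record type, the function name, the sources, and the tonnage values are all hypothetical, not data from any company.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class EmissionRecord:
    source: str
    scope: int          # 1, 2, or 3
    tonnes_co2e: float


def total_by_scope(records: list[EmissionRecord]) -> dict[int, float]:
    """Sum emissions per scope, rejecting unknown scope numbers."""
    totals: dict[int, float] = defaultdict(float)
    for record in records:
        if record.scope not in (1, 2, 3):
            raise ValueError(f"Unknown scope for {record.source!r}")
        totals[record.scope] += record.tonnes_co2e
    return dict(totals)


# Hypothetical entries, for illustration only.
records = [
    EmissionRecord("on-site backup generators", 1, 12.0),
    EmissionRecord("purchased electricity", 2, 340.0),
    EmissionRecord("rented cloud GPU time", 3, 510.0),
    EmissionRecord("hardware manufacturing", 3, 95.0),
]

for scope, tonnes in sorted(total_by_scope(records).items()):
    print(f"Scope {scope}: {tonnes:,.1f} t CO2e")
```

For companies that rent most of their compute, the third-party category can easily be the largest, which is why leaving it out understates the footprint.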

5. Locate Data Centers Strategically

Building data centers in regions with low-carbon electricity grids (e.g., Norway, Canada) and using efficient cooling systems can significantly reduce emissions.
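The effect of location comes down to multiplying the same energy use by a different grid carbon intensity. In the Python sketch below, the intensity values, the grid labels, and the workload size are hypothetical placeholders rather than measurements for any real region.

```python
# Hypothetical grid carbon intensities, in grams of CO2e per kWh.
GRID_INTENSITY_G_PER_KWH = {
    "low-carbon grid": 30,
    "average grid": 400,
    "coal-heavy grid": 800,
}


def annual_emissions_tonnes(energy_mwh: float, grams_per_kwh: float) -> float:
    """Emissions in metric tonnes for a given annual energy use."""
    kwh = energy_mwh * 1_000
    return kwh * grams_per_kwh / 1_000_000  # grams -> tonnes


WORKLOAD_MWH = 1_000  # hypothetical annual energy use of one facility

for grid, intensity in GRID_INTENSITY_G_PER_KWH.items():
    tonnes = annual_emissions_tonnes(WORKLOAD_MWH, intensity)
    print(f"{grid:>16}: {tonnes:,.0f} t CO2e/year")

cleanest = min(GRID_INTENSITY_G_PER_KWH, key=GRID_INTENSITY_G_PER_KWH.get)
print("Lowest-emission option:", cleanest)
```

Under these assumed values the same workload emits more than twenty times as much on the dirtiest grid as on the cleanest one, without any change to the hardware or software.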

6. Foster Sustainable Culture

- Educate employees and users on AI’s environmental costs.
- Source sustainability initiatives from internal teams.
- Partner with green vendors and demand transparency from suppliers.

AI and the Planet Can Coexist—If We Act Now

The rise of AI is not inherently incompatible with environmental sustainability, but the current trajectory is deeply troubling. With AI's environmental impact poised to rival that of entire nations, it's time to rethink what innovation means in the age of climate urgency.

This is a green dilemma we cannot ignore. While AI can be a vital tool in climate resilience and mitigation, it must also be held accountable for its carbon footprint, water use, and material impact.

The call to action is clear: developers, companies, regulators, and consumers must work together to ensure that AI innovation doesn’t come at the planet’s expense. From carbon-neutral data centers to responsible e-waste management, the solutions are within reach.

The future of AI—and our environment—depends on whether we choose to pursue them.

With inputs from agencies

Image Source: Multiple agencies

© Copyright 2025. All Rights Reserved. Powered by Vygr Media.