The world is watching closely to see whether the US will impose more stringent restrictions on China's ability to obtain cutting-edge AI software. This is not merely a change in policy; it is a shot across the bow in the high-stakes race for global technology supremacy. The decision could change the course of history, so fasten your seatbelt.

The Crux of the Issue

At the heart of the matter is the US Commerce Department's proposal to control the export of powerful AI models, the engines behind AI's astounding capabilities such as processing enormous datasets and creating complex content. There is a lot at stake. If these tools fall into the wrong hands, they could become cyberwarfare weapons or, worse, help create biological threats.

Secretary of Commerce Gina Raimondo said:

“The Commerce Department plays a pivotal role in the U.S. government’s approach of seizing the potential that comes with the development of advanced AI, while mitigating dangerous capabilities or risks to safety. Today’s executive order reaffirms that leadership as our Department prepares to undertake significant responsibilities to carry out the President’s vision to build a safer, more secure world.”

“Building on the voluntary commitments secured from leading American companies earlier this year, the President is taking a critical step forward to facilitate and incentivize safe and responsible innovation in AI.”

Why Now?

To slow China's technological advancement, the US has already restricted the export of several advanced AI chips to that country. However, existing rules are lagging behind as AI develops at a dizzying rate. Think of the new regulations as a critical software update intended to preserve America's technological advantage.

The Control Room: AI's Underbelly

The restrictions hinge on a threshold for the amount of computing power used to train an AI model. If training your model exceeds that threshold, you have to report your AI development plans to the Commerce Department. The same threshold could also determine which AI models fall under export regulations.
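To make the threshold idea concrete, here is a minimal Python sketch of how such a check might work. Nothing in it comes from the proposed rule itself: the 10^26-operation default is the figure cited in the October 2023 executive order and is used here only as an assumed placeholder, the "6 x parameters x training tokens" estimate is a common rule of thumb rather than an official formula, and the function names and example models are hypothetical.

```python
# Illustrative only: the threshold value and the 6 * params * tokens rule of
# thumb are assumptions, not figures taken from the proposed export rule.
REPORTING_THRESHOLD_OPS = 1e26  # assumed placeholder threshold, in total training operations


def estimate_training_ops(num_parameters: float, num_tokens: float) -> float:
    """Roughly estimate training compute with the common ~6 * N * D approximation."""
    return 6.0 * num_parameters * num_tokens


def must_report(num_parameters: float, num_tokens: float,
                threshold_ops: float = REPORTING_THRESHOLD_OPS) -> bool:
    """Return True when the estimated training compute exceeds the threshold."""
    return estimate_training_ops(num_parameters, num_tokens) > threshold_ops


if __name__ == "__main__":
    # Hypothetical models, not real systems.
    print(must_report(7e9, 2e12))    # about 8.4e22 operations, below the assumed threshold
    print(must_report(2e12, 2e13))   # about 2.4e26 operations, above the assumed threshold
```

The point of the sketch is simply that the trigger depends on how much compute goes into training, not on what the finished model is used for.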

The Quiet Front: The Importance of Cybersecurity

These days, cyberspace is our unseen battlefield, and the planned restrictions on AI software show how seriously the US takes digital defence. AI models powerful enough to sift through data at an unprecedented scale, like the one behind ChatGPT, could supercharge cyberattacks.

The Risks in Cyberspace

- Threats Powered by AI: The Department of Homeland Security has warned that AI could enable advanced cyberattack tools. Consider malware that replicates itself or AI-driven phishing campaigns that are incredibly hard to detect.

- Risks of Weaponization: Many security professionals are plagued by the terrifying thought that artificial intelligence could be used to create biological weapons.

- Risks to Privacy: AI's aptitude for data processing could be used to steal personal data on a massive scale. Imagine your complete social media history, bank statements, and medical records up for grabs on the dark web.

Examples from the Real World: When Cybersecurity Fails

To put these worries in perspective, let's look at a few recent cybersecurity incidents that show why they are more than hypothetical.

- Iranian Hackers on the Loose: Sensitive documents were leaked by Iranian hackers who broke into an IT network linked to an Israeli nuclear plant. Not nice.

- Russian Phishing Frenzy: Russian hackers targeted German political parties with phishing emails carrying ransomware.

- India Under Attack: A cyber espionage campaign penetrated India's government and energy sectors using malicious files disguised as official correspondence. Deception at its finest.

- China's Targeting Strategies: With the intention of stealing IP addresses and locations, Chinese hackers targeted Italian MPs and EU members of the Inter-Parliamentary Alliance on China. Spooky stuff.

- Canada's Financial Difficulties: A cyber incident took the country's financial intelligence system, FINTRAC, offline, highlighting the vulnerability of essential infrastructure.

- Switzerland's Data Breach: The National Cyber Security Centre of Switzerland has confirmed a data breach that exposed confidential personal information, classified material, and even government agency passwords.

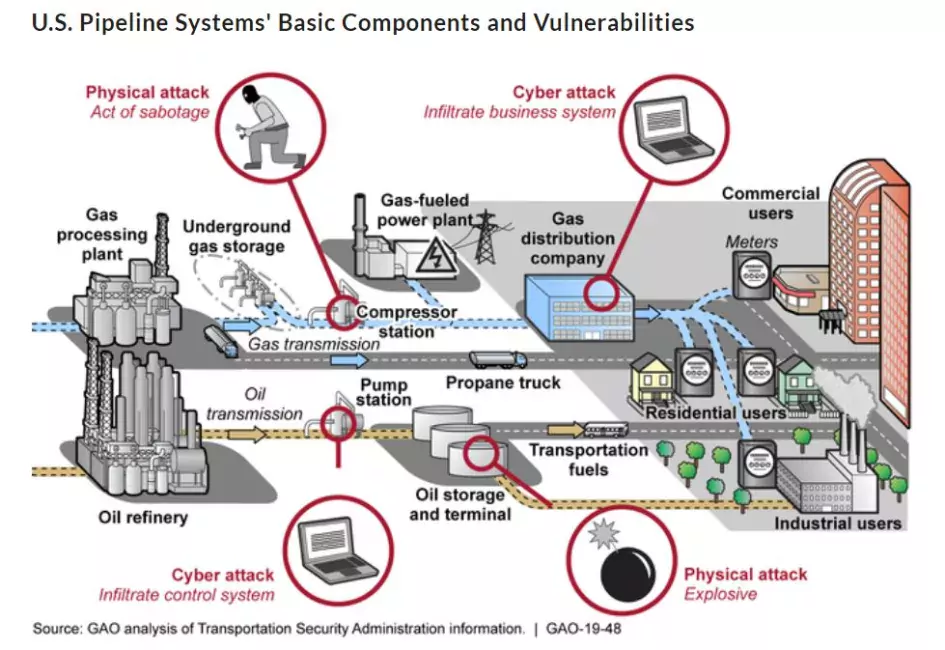

The US itself has felt the sting of cyberattacks:

- Colonial Pipeline Hack: Recall the 2021 Colonial Pipeline hack, which caused a fuel shortage throughout the eastern United States? That was the result of basic cybersecurity lapses. It shows how important it is to get this right.

- Microsoft Source Code Theft: As part of a massive espionage effort, Russian, Chinese, and North Korean hackers broke into Microsoft's internal networks and stole the company's source code. Ouch!

- Ransomware Rampage: With hackers demanding millions of dollars to unlock compromised systems, ransomware attacks have become a serious danger to US infrastructure. Again, yikes!

"We can't have non-state actors or China or folks who we don’t want accessing our cloud to train their models," Secretary of Commerce Gina Raimondo said in an interview with Reuters. "We use export controls on chips," she noted. "Those chips are in American cloud data centers so we also have to think about closing down that avenue for potential malicious activity."


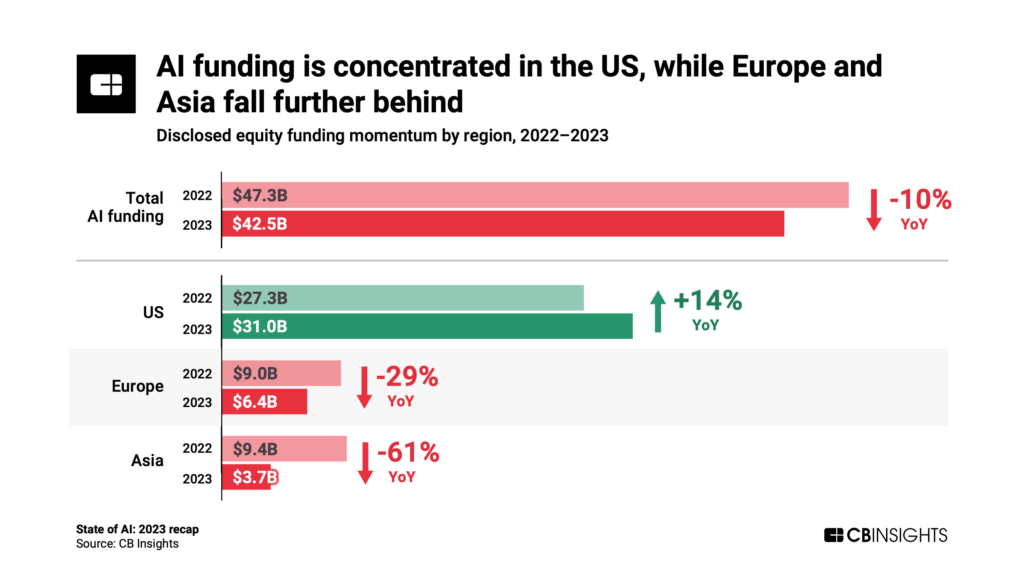

The Money Matters: AI by the Numbers

The proposed controls have enormous financial ramifications. The Department of Defense (DoD), which has invested heavily in AI-related contracts, is leading the US government's massive funding push for AI research. China, for its part, was expected to have invested at least $70 billion in AI research and development by 2020, up from an estimated $12 billion in 2017. There is a lot of money flowing into this tech race. By 2030, AI is predicted to be worth an astounding $15.7 trillion worldwide, and the US and China are fighting for a commanding 70% share of that. The financial rewards in this race are huge, but so are the risks.

The US Concerns: More Than Just Hacks

Beyond cybersecurity, the United States has particular worries about China's access to cutting-edge AI technologies.

- Applications in the Military: There is great concern that China may use cutting-edge AI to improve its military prowess and cyberwarfare potential. Imagine AI-powered autonomous weaponry or AI-driven hacks that destroy vital infrastructure. A terrifying idea.

- State of Surveillance: The US is reluctant to supply AI technology that could bolster China's already-strong surveillance system. Using sophisticated AI to build an ever more complex system for monitoring and controlling citizens raises human rights concerns.

- Economic Espionage: China's potential use of advanced artificial intelligence to steal intellectual property and carry out industrial espionage worries the United States. Imagine China using AI-powered technologies to breach US networks and steal sensitive data, giving them an unfair economic advantage.

- Global Influence: The US is concerned that China's hold on cutting-edge AI could lead to an expansion of its influence worldwide and the undermining of the current free international order. Globally, artificial intelligence has the power to propagate misleading information, change public opinion, and undermine democratic values.

- Data Privacy: There is growing concern about how the Chinese government is handling data privacy and about the potential misuse of AI for broad public surveillance. Imagine living in a society driven by AI where face recognition technology is used to track and monitor every move people make.

Beyond Cybersecurity

The proposed AI export limitations have far-reaching repercussions, even as cybersecurity remains a top priority. Here are some of the ripple effects:

- Economic Consequences: Exporting less AI software could make the US less competitive in the AI industry worldwide. Consider the possible loss of jobs and the missed chances for innovation.

- Innovation Barriers: These regulations may hinder the advancement of artificial intelligence (AI), a technology that has historically benefited from international cooperation. Imagine significant discoveries delayed or blocked because knowledge sharing is limited.

- Tensions in the Tech Race: These actions could intensify the tech competition between the US and China, drawing in other nations and worsening relations among them. An AI-driven new Cold War is not what we want.

The Path Forward: Building Trust, One Conversation at a Time

The way forward is to build bridges, not walls. International cooperation is becoming increasingly important as a safety net as countries struggle to keep up with the rapid advancement of artificial intelligence. No single country can resolve the many ethical, technological, and governance issues that AI raises, so working together is the best approach.

A Global Drive Towards Harmony

Recognising AI's revolutionary potential, nations are actively looking for collaborations to support research and development. Consider the creation of the Global Partnership on AI by the G7. This initiative is a deliberate attempt to guide the ethical advancement of AI and harness the combined expertise of multiple countries.

Think Tanks Without Borders: Multilateral Research Institutes

Institutes like the Multilateral AI Research Institute (MAIRI) embody the spirit of collaborative research. These organisations pool expertise and funding from various countries to accelerate AI innovation while upholding democratic values. Imagine a world where the best minds from across the globe are working together to ensure AI benefits all of humanity.

Standardising the Future: Setting the Rules of the Road

International bodies like the International Organization for Standardization (ISO) and the Organisation for Economic Co-operation and Development (OECD) are at the forefront of developing AI standards and ethical frameworks. These efforts are crucial for ensuring that AI is developed and used responsibly, maximising its benefits while mitigating potential risks.

The Roadblocks on the Highway to Cooperation

While international cooperation holds immense promise, it's not without its hurdles. National interests can clash, economic competition can get fierce, and varying regulatory landscapes can create roadblocks. Plus, the allure of AI dominance might overshadow collaborative efforts. It's a complex puzzle to solve.

The Way Ahead: Establishing Credibility, One Talk at a Time

Countries need to cultivate an environment of mutual trust and benefit in order to overcome these obstacles. The first steps towards a more cohesive and advantageous global AI ecosystem include exchanging best practices, harmonising regulatory strategies, and having candid conversations. Imagine a day in the future when nations collaborate to use AI's potential for good rather than exploitation or conflict.

The Verdict: A Crucial Element

The US is considering new limits on China's access to AI software, a move that has shifted the global tech rivalry. It is a sobering reminder that technological know-how is more crucial than ever. As citizens, it is our duty to follow this conversation and understand it better. These choices could have far-reaching effects not just on the US and China but on the entire world. Before making any significant decisions, policymakers must thoroughly evaluate the possible outcomes. The decisions we make now will shape AI's future and, in turn, the course of our world. Let's work together to make it a good one.

Inputs by multiple agencies

Media Source: Multiple agencies

ⒸCopyright 2024. All Rights Reserved Powered by Vygr Media