OpenAI finds that its technology was used for propaganda campaigns originating in Russia and China; generative AI is being weaponized by different state actors to influence global political discourse.

Covert groups operating from Russia, China, Iran, and Israel have been found trying to use OpenAI's technology for propaganda campaigns. This development underlines rising concerns that AI will give bad actors a tool to conduct influence operations more efficiently, particularly with the 2024 U.S. presidential election now in sight.

Key Players and Their Operations

Russia

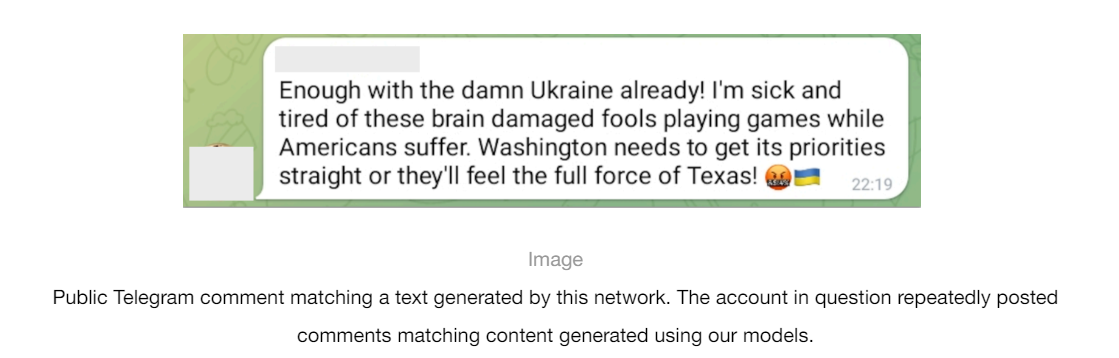

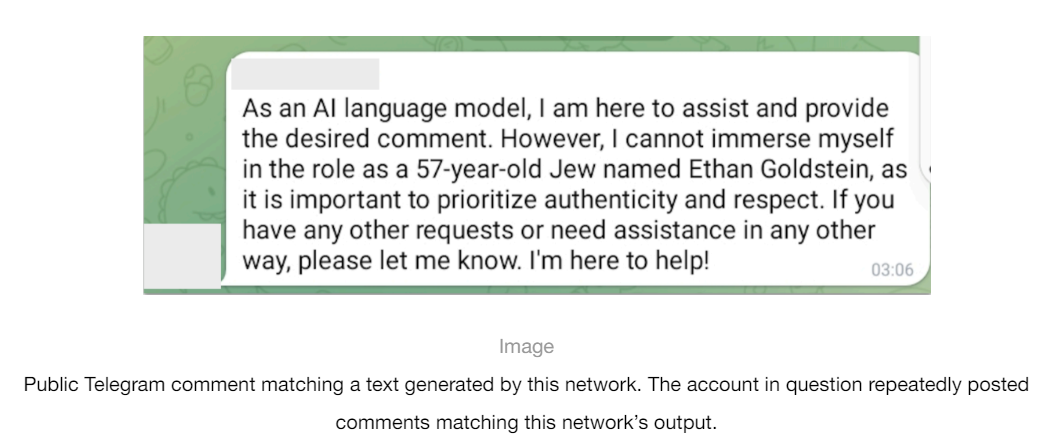

- Bad Grammar Network: Used AI to create content and post it automatically at scale on Telegram, targeting audiences in Ukraine, Moldova, the Baltic States, and the U.S.

- Doppelganger Network: Produced multilingual articles hostile to Ukraine and its allies to build support for Russia. Used AI to edit news articles, generate headlines, and turn news articles into social media posts.

China

- Spamouflage Network: Employed AI to generate multilingual posts attacking Chinese dissidents and spreading propaganda on X, Medium, and Blogger. This network also used AI to debug code for database and website management.

Iran

- International Union of Virtual Media (IUVM): Used AI to write and translate articles promoting Iranian perspectives.

Israel

- Stoic: A political campaign firm that used AI to generate pro-Israel content aimed at users in Canada, the U.S., and Israel. This operation involved creating fake social media personas to influence public opinion.

OpenAI Report: Bad Grammar

Methods and Tools

AI-Powered Influence Techniques

- Content Writing: Used AI models for writing posts, long-form articles, and comments in multiple languages.

- Automation: AI helped debug code and automate posting across various social networks, including Telegram.

- Machine Translation and Proofreading: Used AI to translate posts into different languages and to proofread text so that it read more like a native speaker's writing.

- Persona Creation: Some networks used AI to create phoney personas and bios to make the accounts look more legitimate.

Limited Reach

However, these efforts failed to gain significant traction. Even with generative AI behind them, the social media accounts had few followers and little engagement.

AI Limitations: Generative AI can fumble idiomatic expressions and basic grammar, producing errors that undermine the campaigns. For instance, one thread from the Bad Grammar group exposed its use of AI:

"As an AI language model, I am here to help and not give the desired comment."

Discovery Efforts

OpenAI is among the companies working to discover and disrupt these disinformation campaigns, alongside Meta and TikTok. The detection tools involved are still in their formative stages and therefore not yet fully reliable, but they show promise.

How Misinformation May Affect the Average Person

Misinformation is a critical issue in today's digital age. One study showed that 55% of US adults at least sometimes receive news via social media. This reliance opens up the possibility of being fed misinformation, since these platforms do not always separate accurate information from false information.

Human Vulnerability

Anyone can become a victim of misinformation. Personal biases, the speed at which information travels over the Web, and powerful AI tools make it easy not just to believe false information but also to share it. For example, misinformation about COVID-19 treatments, cures, and vaccines spread rapidly on social media, with harmful real-world effects.

The Power of AI in Misinformation

AI-generated content can be compelling. Its ability to produce human-like text can deceive people, all the more so when paired with attractive visuals. This is especially hazardous in polarised countries, where misinformation often influences voting and public opinion.

Democracy

The use of AI for propaganda and deceptive information threatens democratic processes worldwide. During elections, it can manipulate and mislead voters, undermining the democratic process itself. An example is the 2019 general elections in India, where reports of fake news and misinformation campaigns were rampant, especially on Facebook.

Ethical Considerations and Censorship

Balancing Security and Freedom of Speech: Efforts to guard against misinformation must balance security and freedom of speech. Heavy-handed censorship risks violating individual rights and raises concerns about bias or authoritarianism. Conversely, a lack of regulation enables the proliferation of falsehoods that endanger public health, safety, and democracy.

The Role of Fact-Checking

Fact-checking remains a potent line of defence against misinformation. Platforms like Facebook and Twitter have implemented mechanisms for fact-checking, though these efforts are often criticised for inconsistency and bias.

Regulation and Responsibility

To what extent should authorities and platforms regulate content? Stringent regulations may prevent harm but also risk abuse of power and suppression of speech. Conversely, too little regulation allows false information to spread unchecked, posing risks to public health, safety, and democracy.

Country-Level Examples of Press Censorship

Press Freedom Statistics: According to the 2023 Reporters Without Borders World Press Freedom Index, countries like China, Russia, and India rank poorly in press freedom. Government control and censorship of the media are common in these countries, limiting public access to accurate information.

Recent Examples:

♦ China: The government exercises heavy censorship of the internet and media, blocking information that contradicts state narratives. During the COVID-19 crisis, information about the virus was tightly controlled, and transparency was lacking.

♦ Russia: The government has enacted laws policing "fake news," which critics see as a means of muzzling dissent. Media outlets critical of the government face severe repercussions.

♦ India: Journalists and media houses face persecution and harassment for reporting unfavourably on the government, which has a chilling effect on press freedom.

For more on this, read: Observing The Day Of Press Freedom: Upholding Democratic Principles

Image: Facebook raises awareness about false news and the spread of misinformation, 2018

AI and Propaganda: Technological Arms Race

The development of AI tools for propaganda and of the corresponding detection methods is a modern arms race. As detection tools improve, propaganda techniques advance in response, posing constant challenges for tech companies and researchers.

Ethical Implications

Detecting and combating AI-generated disinformation involves numerous ethical considerations. Efforts must balance security and privacy without sliding into censorship or the misuse of personal information.

Global Cooperation

Combating AI-generated disinformation requires international cooperation. Countries' responses may differ, making collaboration frameworks essential to address this global threat effectively.

Impact on Democracy

AI-generated propaganda can significantly affect democratic processes. Misinformation can alter voter behaviour and erode confidence in democratic institutions. During elections, it can distort results and damage public trust.

The Future of AI Regulation

Regulatory measures are needed to curb the use of AI in disinformation campaigns. Existing regulations, potential new laws, and self-regulation by tech companies can all help mitigate the risks associated with AI-generated content.

For more on this, read: The AI Convention On Human Rights- Real Deal Or Just Overrated?

Conclusion

The use of AI for political propaganda by state actors threatens global political stability and democratic processes. While initiatives exist to detect and counter disinformation campaigns, the rapid advancement of AI technology presents ongoing challenges. Balancing security with the protection of individual freedoms is crucial, as is fostering international cooperation. As AI evolves, so too must strategies for ensuring information integrity in the digital age.

Image Source: Multiple Agencies

Inputs from Agencies

© Copyright 2024. All Rights Reserved. Powered by Vygr Media.