Imagine you are an artist who shares your work online, hoping to gain recognition and income. Then you discover that someone has taken your art to train an AI model that produces similar works, and is selling the model's output without your permission or credit. Frustrating, right?

For most artists, this scenario would provoke anger and a sense of powerlessness. They'd want to protect their creations from AI theft, but how can they do that? Enter Nightshade, a new tool designed to address this problem. Nightshade is a data poisoning tool that lets artists make subtle, hard-to-see changes to their images before posting them. A model trained on enough of these altered images learns distorted associations, which can make its output unreliable or unusable.

In this article, we'll delve into how Nightshade functions, how artists can employ it to safeguard their work, the advantages and disadvantages of data poisoning for both artists and AI companies, and how to obtain Nightshade or its companion tool, Glaze, for your own artistic pursuits.

What is Data Poisoning and How Does It Work?

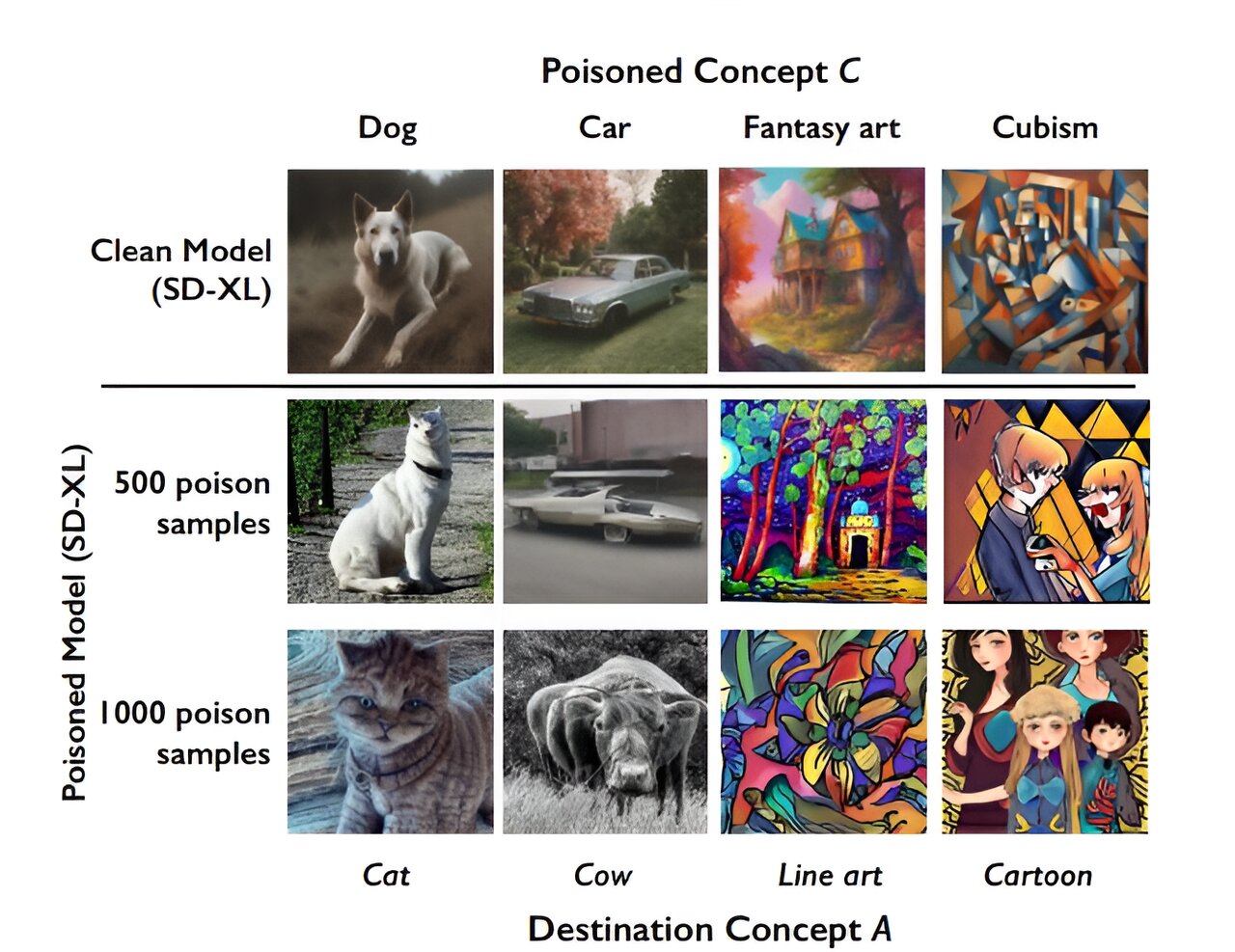

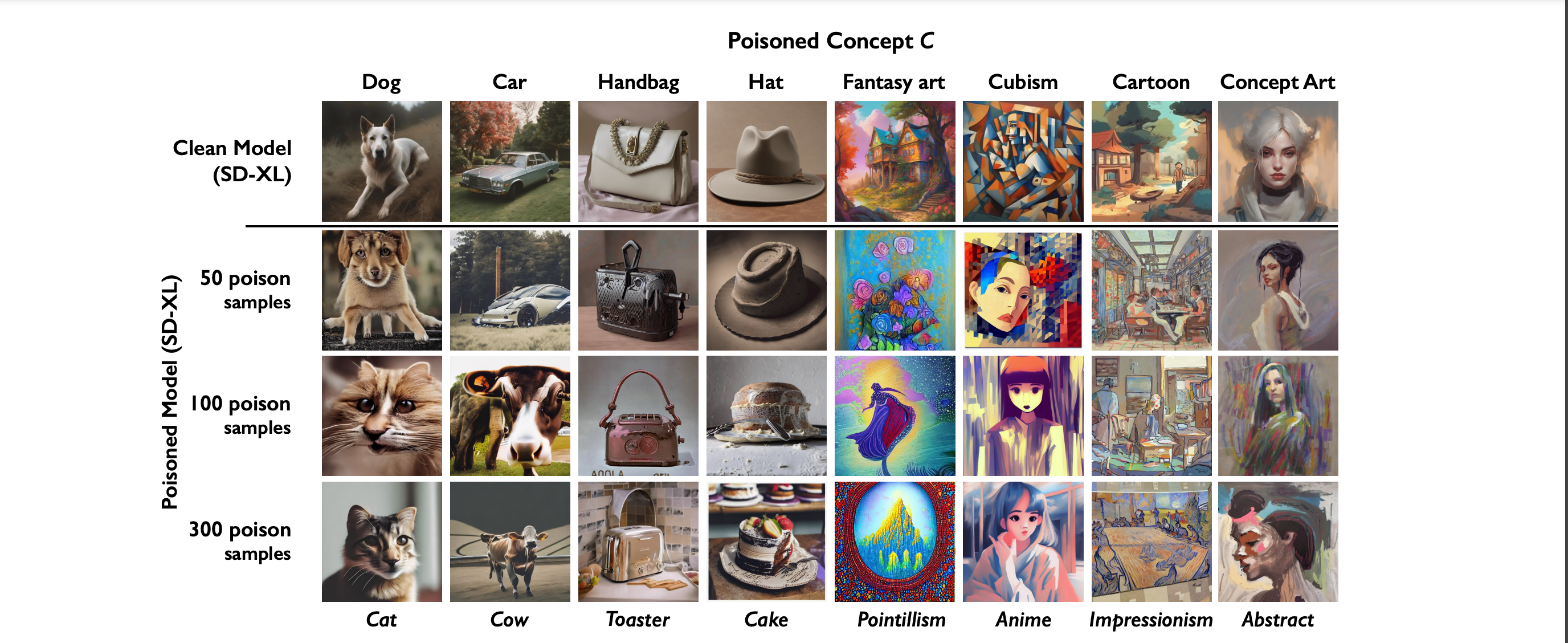

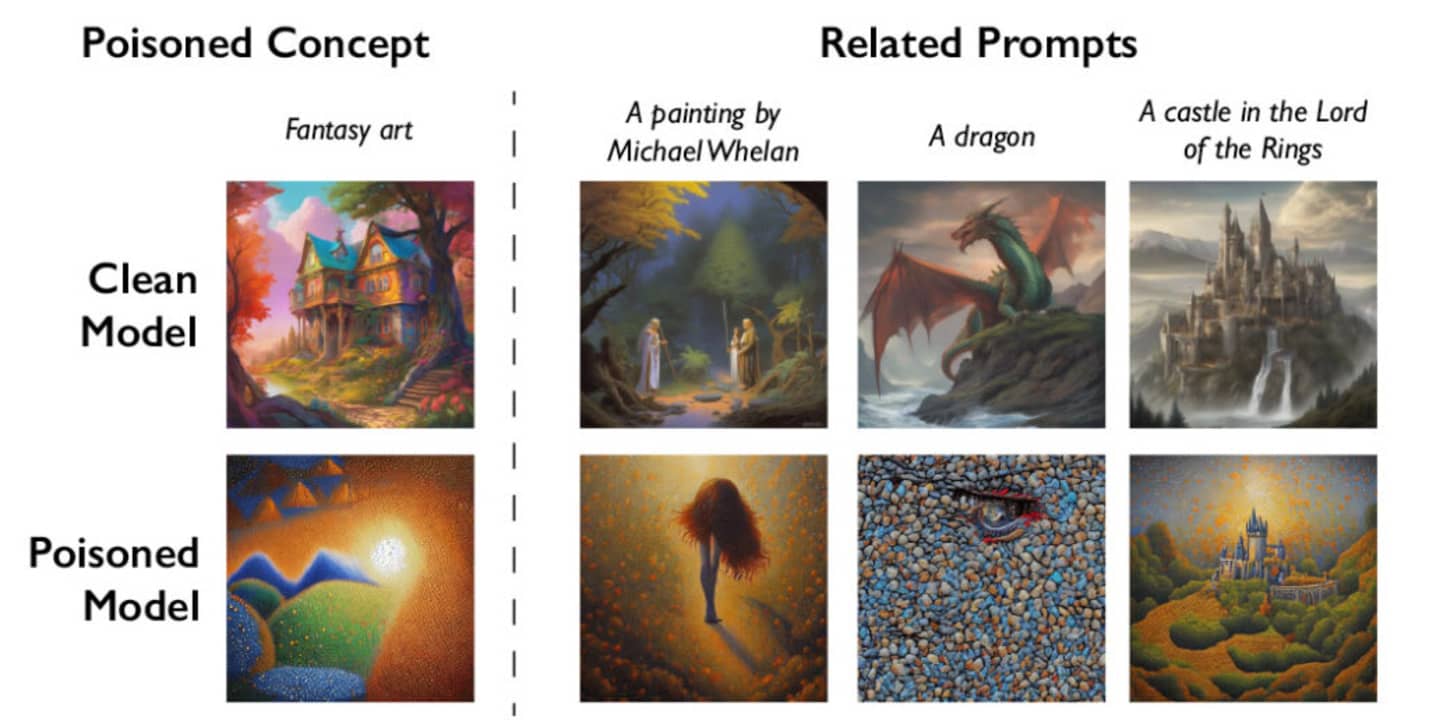

Data poisoning is an attack that degrades an AI system's performance by introducing corrupted or misleading samples into its training set. This tactic can serve various purposes, including sabotage, espionage, fraud, or activism.
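To make the idea concrete, here is a minimal sketch of poisoning a toy classifier. It is not how Nightshade works; the one-dimensional data, the nearest-centroid "model", and every name in it (`make_data`, `train`, `accuracy`) are assumptions chosen for illustration. It shows how a batch of mislabeled training samples can drag a model's learned boundary far enough to hurt accuracy on clean test data.

```python
import random

def make_data(n, rng):
    """Two 1-D classes: label 0 clusters near 0.0, label 1 near 4.0."""
    return [(rng.gauss(4.0 * (i % 2), 1.0), i % 2) for i in range(n)]

def train(data):
    """Nearest-centroid 'model': one mean value per label."""
    centroids = {}
    for label in (0, 1):
        xs = [x for x, y in data if y == label]
        centroids[label] = sum(xs) / len(xs)
    return centroids

def accuracy(centroids, data):
    """Fraction of samples whose nearest centroid matches their label."""
    hits = sum(
        min(centroids, key=lambda k: abs(x - centroids[k])) == y
        for x, y in data
    )
    return hits / len(data)

rng = random.Random(0)
train_set = make_data(400, rng)
test_set = make_data(500, rng)

# Poison (hypothetical): 150 extra samples far to the right that claim label 0.
poisoned_set = train_set + [(12.0, 0)] * 150

clean_acc = accuracy(train(train_set), test_set)
poisoned_acc = accuracy(train(poisoned_set), test_set)
print(f"clean: {clean_acc:.2f}  poisoned: {poisoned_acc:.2f}")
```

The test data is never touched. Only the training set changes, which is what separates poisoning from attacks that tamper with a model's inputs at prediction time.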

Data poisoning takes different forms depending on the attack's goal. A closely related idea is the adversarial example: an input altered in small ways so that an AI system misclassifies or misinterprets it. Nightshade applies a similar kind of alteration to images that end up in training data, so that a model trained on them learns the wrong associations.
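The sketch below shows the adversarial-example idea on a hypothetical linear classifier, not on an image model and not with Nightshade's actual method. The weights, bias, and input are made up for the demonstration. Each feature is nudged by a small `epsilon` against the sign of its weight, and across many features those small nudges add up to a flipped prediction.

```python
def score(weights, bias, x):
    """Linear score: positive means class 1, otherwise class 0."""
    return sum(w * xi for w, xi in zip(weights, x)) + bias

def predict(weights, bias, x):
    return 1 if score(weights, bias, x) > 0 else 0

def sign(v):
    return (v > 0) - (v < 0)

def adversarial(weights, x, epsilon):
    """Shift every feature by epsilon against its weight's sign to lower the score."""
    return [xi - epsilon * sign(w) for w, xi in zip(weights, x)]

# Hypothetical model with 1000 features and alternating weights.
weights = [0.1 if i % 2 == 0 else -0.1 for i in range(1000)]
bias = 0.5
x = [0.5] * 1000

x_adv = adversarial(weights, x, epsilon=0.01)

print("original prediction:", predict(weights, bias, x))
print("perturbed prediction:", predict(weights, bias, x_adv))
print("largest per-feature change:", max(abs(a - b) for a, b in zip(x, x_adv)))
```

No single feature moves by more than about 0.01, yet the predicted class changes. The same imbalance between small input changes and large model effects is what makes hard-to-see image alterations possible.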

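In one respect Nightshade resembles steganography, the art of concealing messages within other media: both subtly alter color values in image pixels in ways the human eye does not notice. The goal differs, though. Rather than hiding a readable message or a watermark, Nightshade shifts how a model interprets the image, so that training on it teaches the model the wrong association. Glaze, a companion tool from the same research team, takes a defensive approach, cloaking an artist's style so that models struggle to imitate it.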
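As a rough illustration of what "subtly altering color values" means, the sketch below changes every color channel of a small synthetic image by at most a few units out of 255. The random noise is only a stand-in: a real poisoning tool would compute its changes deliberately, and this code does not poison anything. The function names and the `epsilon` budget are assumptions made for the example.

```python
import random

def perturb(image, epsilon, rng):
    """Return a copy of the image with each channel shifted by at most epsilon."""
    return [
        [
            tuple(max(0, min(255, c + rng.randint(-epsilon, epsilon))) for c in pixel)
            for pixel in row
        ]
        for row in image
    ]

def max_channel_diff(a, b):
    """Largest absolute difference between matching channels of two images."""
    return max(
        abs(ca - cb)
        for row_a, row_b in zip(a, b)
        for pa, pb in zip(row_a, row_b)
        for ca, cb in zip(pa, pb)
    )

rng = random.Random(0)

# Synthetic 8x8 RGB image stored as rows of (r, g, b) tuples.
original = [
    [tuple(rng.randrange(256) for _ in range(3)) for _ in range(8)]
    for _ in range(8)
]

altered = perturb(original, epsilon=4, rng=rng)
print("largest channel change:", max_channel_diff(original, altered))
```

A change of four units per channel is far below what most viewers would notice, which is why a per-pixel budget like this is a common way to describe an "invisible" alteration.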

Nightshade and Glaze together create a system for data poisoning and protection, helping artists secure their work against AI theft.

How Can Artists Use Nightshade to Protect Their Work from AI Theft?

Artists can utilize Nightshade to safeguard their work from AI theft by following these steps:

- Download Nightshade from its official website and install the version for your platform.

- Launch Nightshade and select the images you wish to protect, then choose the settings the tool offers, such as how strong the changes should be.

- Run the poisoning step and wait for processing to finish. Compare each poisoned image with its original to confirm the changes are visually acceptable.

- Save the poisoned images and share those versions online in place of the originals.

What are the Benefits and Risks of Data Poisoning for Artists and AI Companies?

Data poisoning offers both benefits and risks:

Benefits:

- Artists can protect their work from unauthorized AI use, promoting respect for their creative efforts.

- Data poisoning raises awareness about AI ethics and art protection.

- AI companies can enhance security and reliability.

Risks:

- Poisoned images can degrade the datasets and models that artists themselves rely on, limiting their access to high-quality AI tools.

- It may harm AI companies, reducing their products' performance and credibility.

How Can I Get Nightshade or Glaze for My Own Art?

You can obtain Nightshade and Glaze from the official project pages run by the University of Chicago research team that develops them. Both are offered as free downloads. Check the project pages for current platform support, licensing terms, and how to send feedback and suggestions.

In conclusion, Nightshade empowers artists to combat AI theft and challenges AI companies to respect artists' rights. It sparks essential discussions about AI's impact on the art world, prompting questions regarding originality, collaboration, and innovation in the age of AI. This article aims to inspire you to explore Nightshade and its implications for the intersection of art and AI.

© Copyright 2023. All Rights Reserved Powered by Vygr Media.