On Monday (May 13), OpenAI released its latest large language model (LLM), GPT-4o, which it bills as its fastest and most powerful AI model to date. According to the company, the new model will make ChatGPT more intelligent and easier to use.

Until now, only paying customers could access GPT-4, OpenAI's most sophisticated LLM. GPT-4o, however, will be freely available.

What is GPT-4o?

GPT-4o (the "o" stands for "omni") is an AI model designed to make human-computer interaction more natural. Users can enter any mix of text, audio, and images, and the model can respond in any combination of those formats. That makes GPT-4o a natively multimodal model, which is a major improvement over earlier models.

OpenAI CTO Mira Murati described the new model as the company's first major step forward in ease of use.

Judging by the live demos, GPT-4o turns ChatGPT from a chatbot into a multipurpose digital personal assistant. It handled real-time translation, read a user's facial expressions, and held real-time spoken conversations, a combination that appears to put it ahead of its competitors.

GPT-4o works with both language and vision, so it can view and discuss charts, documents, images, and screenshots that users upload. According to OpenAI, the newly released version of ChatGPT will also have improved memory and will retain information from a user's earlier conversations.
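For developers, mixing text and an image in one request might look like the minimal sketch below. It only builds the message payload in the shape used by OpenAI's Chat Completions API for image input; the helper name `build_vision_message` and the example URL are assumptions made for illustration, and nothing is sent over the network.

```python
from typing import Any


def build_vision_message(prompt: str, image_url: str) -> dict[str, Any]:
    """Build one user message that mixes text and an image (hypothetical helper)."""
    return {
        "role": "user",
        "content": [
            {"type": "text", "text": prompt},
            {"type": "image_url", "image_url": {"url": image_url}},
        ],
    }


message = build_vision_message(
    "What trend does this chart show?",
    "https://example.com/chart.png",  # placeholder URL, not a real chart
)

# Sending it would look roughly like this with the official `openai` package
# and an API key (not executed here):
#   client.chat.completions.create(model="gpt-4o", messages=[message])
print([part["type"] for part in message["content"]])
```

Keeping the text and the image in the same message is what lets the model answer a question about the picture rather than treating the two as separate requests.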

Technology behind GPT-4o

LLMs are the foundation of AI chatbots. They are trained on massive volumes of data, from which they learn patterns on their own.

Unlike its predecessors, which needed several models to handle different tasks, GPT-4o is a single model trained end-to-end across text, vision, and audio. To illustrate, Murati described the voice mode on earlier models, which chained together three separate models: transcription, intelligence, and text-to-speech. With GPT-4o, all of this happens natively.

Live demo of GPT-4o voice variation pic.twitter.com/b7lLJkhBt1— OpenAI (@OpenAI) May 13, 2024

Because everything runs in one model, GPT-4o can process inputs more holistically. For instance, it can pick up tone, background noise, and emotional context from audio input all at once. Earlier versions struggled with these abilities.
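The difference between the older three-model voice mode and a single end-to-end model can be illustrated with a toy sketch. Everything below is hypothetical: the `AudioClip` class and the stage functions are stand-ins invented for illustration, not OpenAI's implementation. The point is only that a transcript-first pipeline discards tone and background noise before the reasoning step ever sees the input.

```python
from dataclasses import dataclass


@dataclass
class AudioClip:
    """Toy stand-in for recorded speech: the words plus non-verbal cues."""
    words: str
    tone: str
    background: str


def transcribe(clip: AudioClip) -> str:
    # Stage 1 (hypothetical): speech-to-text keeps only the words.
    return clip.words


def reason(text: str) -> str:
    # Stage 2 (hypothetical): a text-only model sees the transcript alone.
    return f"reply to '{text}'"


def synthesize(text: str) -> str:
    # Stage 3 (hypothetical): text-to-speech wraps the reply as audio.
    return f"<speech>{text}</speech>"


def cascaded_voice_mode(clip: AudioClip) -> str:
    """Three chained models: tone and background are lost at stage 1."""
    return synthesize(reason(transcribe(clip)))


def end_to_end_voice_mode(clip: AudioClip) -> str:
    """One model receives the whole clip, so non-verbal cues stay available."""
    reply = f"reply to '{clip.words}' (tone: {clip.tone}, background: {clip.background})"
    return f"<speech>{reply}</speech>"


clip = AudioClip(words="great, another meeting", tone="sarcastic", background="traffic")
print(cascaded_voice_mode(clip))
print(end_to_end_voice_mode(clip))
```

In the cascaded version the word "sarcastic" never reaches the reasoning stage, which is why earlier voice modes could not respond to how something was said.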

GPT-4o also stands out for speed and efficiency. It responds to audio input in as little as 232 milliseconds, with an average of 320 milliseconds, which is close to human response time in conversation. This is a significant improvement over earlier models, whose Voice Mode averaged 2.8 seconds (GPT-3.5) and 5.4 seconds (GPT-4).
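To put the latency gain in perspective, the short calculation below compares GPT-4o's 320-millisecond average with the average Voice Mode latencies OpenAI reported for earlier models in its announcement (2.8 seconds for GPT-3.5 and 5.4 seconds for GPT-4). The constant and function names are illustrative, and the figures are averages, so individual responses will vary.

```python
# Average audio response latencies in milliseconds, as reported by OpenAI.
GPT4O_AVG_MS = 320
PRIOR_VOICE_MODE_AVG_MS = {"GPT-3.5": 2800, "GPT-4": 5400}


def speedup(old_ms: int, new_ms: int) -> float:
    """Return how many times faster the new latency is than the old one."""
    return old_ms / new_ms


for model, old_ms in PRIOR_VOICE_MODE_AVG_MS.items():
    factor = speedup(old_ms, GPT4O_AVG_MS)
    print(f"Voice Mode on {model}: {old_ms} ms vs {GPT4O_AVG_MS} ms ({factor:.2f}x faster)")
```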

It is also multilingual, with notable improvements in handling non-English text, which makes it more accessible to people around the world.

GPT-4o also has improved vision and audio comprehension. During the live demo, a user wrote a linear equation on paper and ChatGPT worked through it in real time. It was also able to recognize objects and gauge the speaker's emotions on camera.

Say hello to GPT-4o, our new flagship model which can reason across audio, vision, and text in real time: https://t.co/MYHZB79UqN

Text and image input rolling out today in API and ChatGPT with voice and video in the coming weeks. pic.twitter.com/uuthKZyzYx— OpenAI (@OpenAI) May 13, 2024

Timeliness of GPT-4o

GPT-4o arrives as the AI race is heating up, with tech giants Meta and Google working to build more powerful LLMs and incorporate them into their products. Microsoft, which has invested billions in OpenAI, could benefit from GPT-4o because it can now build the model into its existing services.

The new model also arrived a day before the Google I/O developer conference, where Google is expected to announce updates to its Gemini AI model. Like GPT-4o, Gemini is expected to be multimodal. In addition, news about AI integration into iPhones or iOS updates is expected at Apple's Worldwide Developers Conference in June.

Release of GPT-4o

GPT-4o will be released to the public in stages. Text and image features are already rolling out in ChatGPT, and some are free for all users. Audio and video functions will reach developers and a small group of trusted partners gradually, with the assurance that every modality (voice, text-to-speech, and vision) will meet safety requirements before full deployment.

Limitations and safety concerns of GPT-4o

Although OpenAI calls it its most advanced model, GPT-4o has limitations. According to OpenAI's official blog, the company is still in the early phases of exploring what unified multimodal interaction can do. As a result, some functions, such as audio output, are initially available only in limited form and with preset voices.

The company stated that further development and updates are needed before the model reaches its full potential in handling complex multimodal tasks seamlessly.

GPT-4o has built-in safety features, such as "filtered training data, and refined model behavior post-training," according to OpenAI. The company says the new model has undergone rigorous safety evaluations and external reviews focused on bias, misinformation, and cybersecurity threats.

Although GPT-4o scores no higher than a Medium risk rating in any of these categories, OpenAI stated that it is continuing work to detect and mitigate new risks.

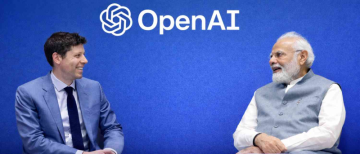

(Image Source: Multiple Agencies)

Inputs by agencies

Ⓒ Copyright 2024. All Rights Reserved Powered by Vygr Media.