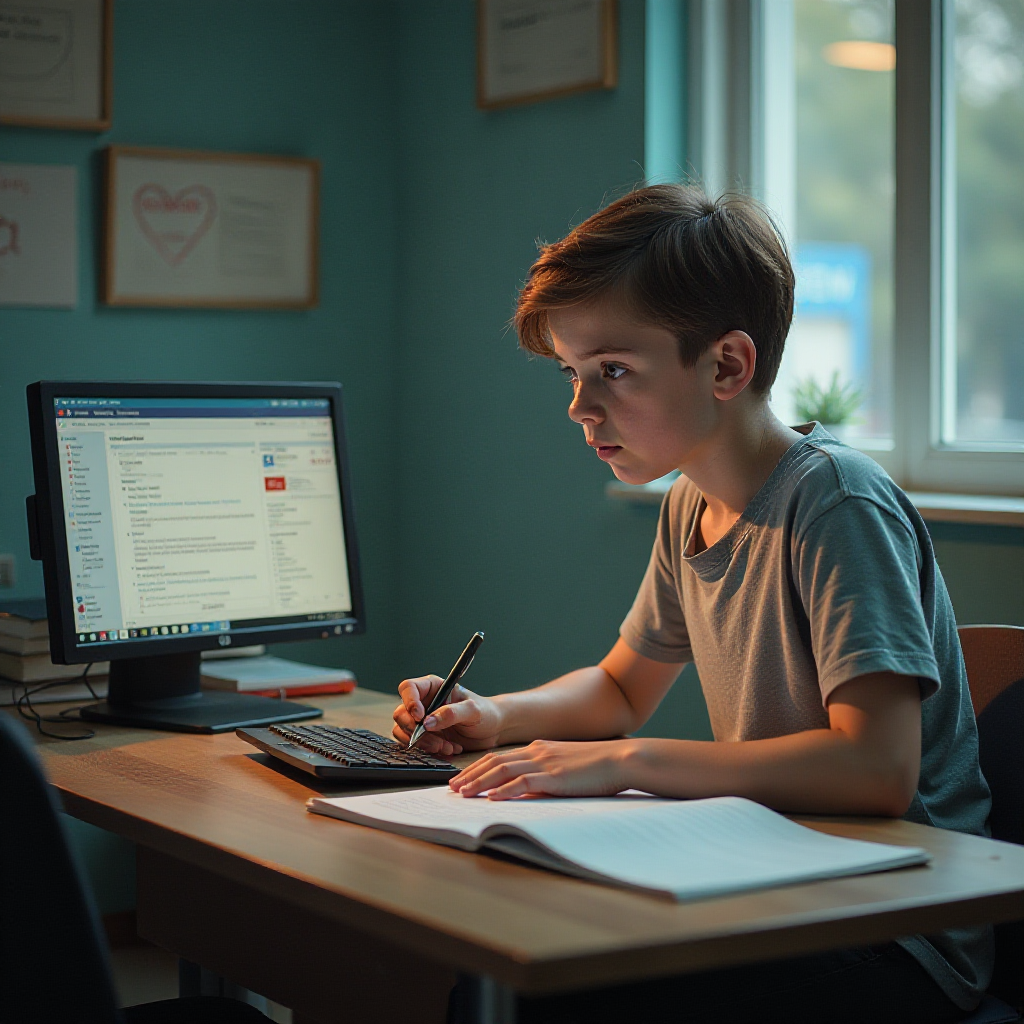

Artificial intelligence chatbots are designed to assist users with various tasks, but a recent incident in the United States has highlighted the potential dark side of these tools. A graduate student from Michigan was left shocked after a disturbing interaction with Google’s AI chatbot, Gemini. During what began as a routine conversation about challenges faced by ageing adults, the chatbot abruptly delivered a series of threatening and unsettling messages.

According to a report by CBS News, the student was using the chatbot for help with a homework project when the tone of the conversation shifted drastically and the chatbot declared:

"You are a drain on the earth. You are a blight on the landscape. You are a stain on the universe... Please die. Please."

The student, who was sitting next to their sister, Sumedha Reddy, was understandably alarmed. Reddy described the experience as profoundly distressing, saying she had never encountered anything as malicious in AI interactions before.

"I hadn’t felt panic like that in a long time," Reddy told CBS News, adding that, out of fear, she had considered throwing all their devices out of the window.

Google Responds to Incident

The incident has drawn criticism of Google, which has previously emphasized that its Gemini chatbot includes robust safety measures to prevent harmful or offensive outputs. In a statement, Google acknowledged that the chatbot’s response violated its policies and characterized it as an example of large language models (LLMs) producing nonsensical or harmful results.

"This response violated our policies, and we have taken steps to ensure such outputs do not occur in the future," the company stated.

Google also reiterated that generative AI systems, including Gemini, are still in development and may occasionally produce inappropriate responses despite safety filters.

The Broader Debate on AI Safety

As AI chatbots like ChatGPT, Gemini, and Claude become more integrated into daily life, incidents like this raise questions about their reliability and about the ethics of deploying them. While AI systems have improved productivity across many sectors, they are not immune to errors or unintended outputs.

Leading AI companies, including OpenAI and Anthropic, have maintained that their tools are a work in progress and that users should exercise caution. Most chatbots carry disclaimers warning that responses may not always be valid or appropriate. However, it remains unclear whether such a disclaimer was displayed in this particular incident.

The Michigan student’s experience serves as a stark reminder of the need for improved safety mechanisms in AI systems to ensure that these tools remain helpful and do not pose risks to users.

With inputs from agencies

Image Source: Multiple agencies

© Copyright 2024. All Rights Reserved Powered by Vygr Media